Linux Integration Guide

Version 2.4.1

Published December 2019

Legal Notices

Copyright © 2019 Hewlett Packard Enterprise Development LP. All rights reserved worldwide.

Notices

The information contained herein is subject to change without notice. The only warranties for Hewlett Packard Enterprise products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein.

Confidential computer software. Valid license from Hewlett Packard Enterprise required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

Links to third-party websites take you outside the Hewlett Packard Enterprise website. Hewlett Packard Enterprise has no control over and is not responsible for information outside the Hewlett Packard Enterprise website.

Acknowledgments

Intel®, Itanium®, Pentium®, Intel Inside®, and the Intel Inside logo are trademarks of Intel Corporation in the United States and other countries.

Microsoft® and Windows® are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.

Adobe® and Acrobat® are trademarks of Adobe Systems Incorporated. Java® and Oracle® are registered trademarks of Oracle and/or its affiliates.

UNIX® is a registered trademark of The Open Group.

Publication Date

Tuesday December 10, 2019 15:18:04

Document ID

gob1536692193582

Support

All documentation and knowledge base articles are available on HPE InfoSight at https://infosight.hpe.com.

To register for HPE InfoSight, click the Create Account link on the main page.

Email: [email protected]

For all other general support contact information, go to https://www.nimblestorage.com/customer-support/.

Copyright © 2019 by Hewlett Packard Enterprise Development LP. All rights reserved.

Documentation Feedback: [email protected]


Contents

HPE Nimble Storage Linux Toolkit (NLT)...................................................6

Prerequisites.........................................................................................................................................6

NORADATAMGR Prerequisites................................................................................................6

NCM Prerequisites.....................................................................................................................7

Docker Prerequisites.................................................................................................................7

NLT Backup Service Prerequisites............................................................................................8

Nimbletune Prerequisites...........................................................................................................8

Kubernetes Prerequisites..........................................................................................................8

Download the NLT Installation Package..............................................................................................9

Install NLT Using the GUI.....................................................................................................................9

NLT Commands.................................................................................................................................10

HPE Nimble Storage Oracle Application Data Manager.........................12

The noradatamgr.conf File.................................................................................................................12

ASM_DISKSTRING Settings...................................................................................................13

Accessing NORADATAMGR..............................................................................................................13

NORADATAMGR Workflows..............................................................................................................14

Describe an Instance...............................................................................................................15

Describe a Diskgroup..............................................................................................................16

Enable NPM for an instance....................................................................................................17

Disable NPM for an instance...................................................................................................18

Edit an Instance.......................................................................................................................18

Take a Snapshot of an Instance..............................................................................................19

List and Filter Snapshots of an Instance.................................................................................21

List Pfile and Control File.........................................................................................................23

Clone an Instance....................................................................................................................24

Delete a Snapshot of an Instance...........................................................................................29

Destroy a Cloned Instance......................................................................................................30

Destroy an ASM Diskgroup.....................................................................................................30

Overview of Using RMAN with NORADATAMGR .............................................................................31

Add a NORADATAMGR Backup to an RMAN Recovery Catalog...........................................33

Clean Up Cataloged Diskgroups and Volumes Used With RMAN..........................................34

RAC Database Recovery........................................................................................................36

Oracle NPM and NLT Backup Service...............................................................................................38

NPM Commands.....................................................................................................................38

Troubleshooting NORADATAMGR....................................................................................................39

Log Files..................................................................................................................................39

SQL Plus..................................................................................................................................39

Copyright

©

2019 by Hewlett Packard Enterprise Development LP. All rights reserved.

Information for a noradatamgr Issue.......................................................................................39

ASM Disks Offline When Controller Failure Occurs................................................................40

noradatamgr Command Fails after NLT Installation................................................................40

Snapshot and Clone Workflows Fail if spfile not Specified......................................................40

Failover causes ASM to mark disks offline or Oracle database fails.......................................41

Bringing Up the RAC Nodes After an RMAN Recovery Operation..........................................41

HPE Nimble Storage Docker Volume Plugin............................................42

Docker Swarm and SwarmKit Considerations...................................................................................42

The docker-driver.conf File.................................................................................................................43

Docker Volume Workflows.................................................................................................................46

Create a Docker Volume.........................................................................................................48

Clone a Docker Volume...........................................................................................................49

Provisioning Docker Volumes..................................................................................................49

Import a Volume to Docker......................................................................................................50

Import a Volume Snapshot to Docker......................................................................................50

Restore an Offline Docker Volume with Specified Snapshot ..................................................51

Create a Docker Volume using the HPE Nimble Storage Local Driver...................................51

List Volumes............................................................................................................................52

Run a Container Using a Docker Volume................................................................................52

Remove a Docker Volume.......................................................................................................53

HPE Nimble Storage Connection Manager (NCM) for Linux..................54

Configuration......................................................................................................................................54

DM Multipath Configuration.....................................................................................................54

Considerations for Using NCM in Scale-out Mode..................................................................54

LVM Filter for NCM in Scale-Out Mode...................................................................................54

iSCSI and FC Configuration....................................................................................................55

Block Device Settings..............................................................................................................55

Discover Devices for iSCSI......................................................................................................55

Discover Devices for Fibre Channel........................................................................................56

List Devices.............................................................................................................................57

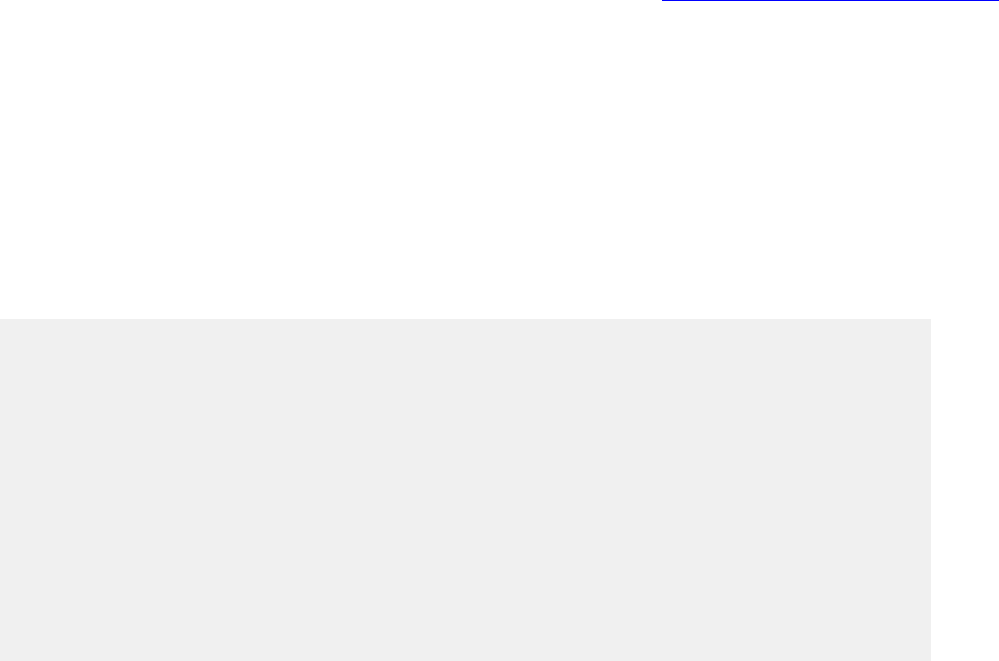

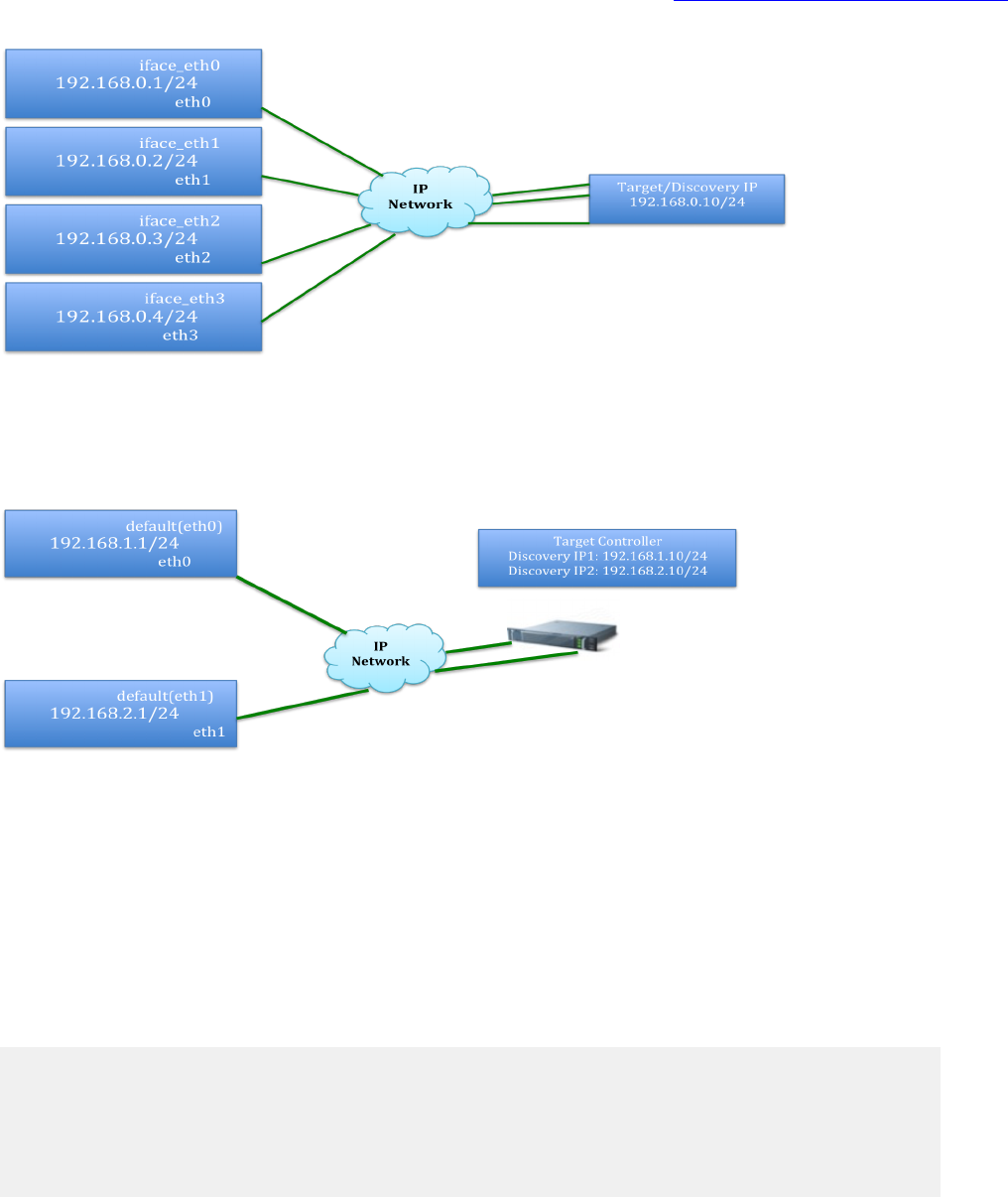

iSCSI Topology Scenarios.......................................................................................................57

iSCSI Connection Handling.....................................................................................................58

SAN Boot Device Support.......................................................................................................59

Create and Mount Filesystems................................................................................................59

Major Kernel Upgrades and NCM...........................................................................................63

Known Issues and Troubleshooting Tips...........................................................................................64

Information for a Linux Issue...................................................................................................64

Old iSCSI Targets IQNs Still Seen after Volume Rename on the Array..................................64

iSCSI_TCP_CONN_CLOSE Errors.........................................................................................65

rescan-scsi-bus.sh Hangs or Takes a Long Time While Scanning Standby Paths.................65

Long Boot Time With a Large Number of FC Volumes...........................................................65

Copyright

©

2019 by Hewlett Packard Enterprise Development LP. All rights reserved.

Duplicate Devices Found Warning..........................................................................................65

Newly Added iSCSI Targets Not Discovered..........................................................................66

System Enters Maintenance Mode on Host Reboot with iSCSI Volumes Mounted................66

hung_tasks_timeout Errors......................................................................................................66

RHEL 7.x: FC System Fails to Boot........................................................................................67

RHEL 7.x: Fibre Channel Active Paths are not Discovered Promptly.....................................67

During Controller Failover, multipathd Failure Messages Repeat...........................................67

LVM Devices Missing from Output..........................................................................................67

Various LVM and iSCSI Commands Hang on /dev/mapper/mpath Devices...........................67

Devices Not Discovered by Multipath......................................................................................68

System Drops into Emergency Mode......................................................................................68

NLT Installer by Default Disables dm-switch Devices on Upgrades........................................68

NCM with RHEL HA Configuration.....................................................................................................68

The reservation_key Parameter..............................................................................................69

Volume Move Limitations in HA Environments........................................................................69

multipath.conf Settings.......................................................................................................................70

HPE Nimble Storage Kubernetes FlexVolume Plugin and Kubernetes

Storage Controller..................................................................................71

Supported Plugins..............................................................................................................................71

Known Issues.....................................................................................................................................71

Installing the Kubernetes Storage Controller......................................................................................71

Configuring the Kubelet......................................................................................................................72

Kubernetes Storage Controller Workflows.........................................................................................73

Creating a Storage Class........................................................................................................73

Create a Persistent Volume.....................................................................................................77

Delete a Persistent Volume.....................................................................................................78

Nimbletune..................................................................................................79

Nimbletune Commands......................................................................................................................79

Change Nimbletune Recommendations.............................................................................................84

Block Reclamation.....................................................................................85

Reclaiming Space on Linux................................................................................................................86

Copyright

©

2019 by Hewlett Packard Enterprise Development LP. All rights reserved.

HPE Nimble Storage Linux Toolkit (NLT)

This document describes the HPE Nimble Storage Linux Toolkit (NLT) version 2.4.1 and its services. NLT can install the following services:

• HPE Nimble Storage Connection Manager (NCM)

• HPE Nimble Storage Oracle Application Data Manager (NORADATAMGR)

• HPE Nimble Storage Docker Volume plugin

• HPE Nimble Storage Kubernetes FlexVolume Plugin and Kubernetes Storage Controller

• HPE Nimble Storage Backup Service

• HPE Nimble Host Tuning Utility

• The Config Collector Service

To use NLT and its services, the following knowledge is assumed:

• Basic Linux system administration skills

• Basic iSCSI SAN knowledge

• Basic Docker knowledge (to use the Docker Volume plugin)

• Oracle ASM and database technology (to use NORADATAMGR)

Note NLT collects host-based information and sends it to the array once daily per array. The array archives the information for use by InfoSight predictive analytics. No proprietary data or personal information is collected. Only basic information about the host is collected, such as the hostname, the host OS, whether the host is running as a VM or in machine mode, and the MPIO configuration.

Prerequisites

To use the HPE Nimble Storage Linux Toolkit (NLT) and its components, you must have administrator access and a management connection from the host to the array. In addition, the host must meet the following system requirements:

Hardware requirements

• 1 GB of RAM or more

• 500 MB to 1 GB of free disk space on the root (/) partition, or on the /opt partition if it is mounted separately

• A hostname other than “localhost” if the Application Data Manager or the Docker Volume plugin is used
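You can check the hardware requirements above before you run the installer. The following is a minimal sketch, not an HPE-provided tool. The function names are hypothetical and the thresholds are taken from the list. Free space is measured on /opt when /opt is a separate mount point, and on / otherwise.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the NLT hardware requirements.
# Thresholds: at least 1 GB RAM, at least 500 MB free disk space, and a
# hostname other than "localhost". Sizes are in kB, as /proc/meminfo and df -k report them.

# Succeeds when memory (kB) is at least 1 GB. MemTotal is usually a little
# below the installed RAM, so a host with exactly 1 GB may fall just short.
ram_ok() {
    [ "$1" -ge $((1024 * 1024)) ]
}

# Succeeds when free space (kB) is at least 500 MB, the lower bound of the
# documented 500 MB to 1 GB range.
disk_ok() {
    [ "$1" -ge $((500 * 1024)) ]
}

# Succeeds when the hostname is set and is not "localhost" (short or FQDN form).
hostname_ok() {
    [ -n "$1" ] && [ "${1%%.*}" != "localhost" ]
}

# Collects live values on a Linux host and prints one line per check.
preflight() {
    local mem_kb free_kb target=/
    mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    # Measure /opt if it is a separate mount point, otherwise the root filesystem.
    if mountpoint -q /opt 2>/dev/null; then target=/opt; fi
    free_kb=$(df -Pk "$target" | awk 'NR == 2 {print $4}')
    if ram_ok "$mem_kb" 2>/dev/null; then echo "RAM: ok"; else echo "RAM: below 1 GB"; fi
    if disk_ok "$free_kb" 2>/dev/null; then echo "Disk: ok on $target"; else echo "Disk: under 500 MB free on $target"; fi
    if hostname_ok "$(hostname 2>/dev/null)"; then echo "Hostname: ok"; else echo "Hostname: unset or localhost"; fi
}
```

Run preflight on the target host and review each line before you start the installer.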

Software requirements vary depending on which NLT features are installed. Refer to the following topics for more information:

• Prerequisites and Support for HPE Nimble Storage Connection Manager

• NORADATAMGR Prerequisites for HPE Nimble Storage Oracle Application Data Manager

• Docker Prerequisites for HPE Nimble Storage Docker Volume Plugin

• Kubernetes Prerequisites for HPE Nimble Storage Kubernetes FlexVolume Plugin and Dynamic Provisioner

• NLT Backup Service Prerequisites for the NLT Backup Service

• Nimbletune Prerequisites for the Nimbletune utility

NORADATAMGR Prerequisites

To use NORADATAMGR and its components, the following prerequisites must be met.

Software requirements:

• NimbleOS 3.5 or higher


• Oracle 11.2.x.x and Oracle 12cR1 (12.1.x.x)

• Oracle Automatic Storage Management (ASM) or ASMlib

• RHEL 6.5 and above

• RHEL 7.1 and above

• Oracle Linux 6.5 and above

• CentOS 6.5 and above

Note In RHEL/CentOS/Oracle Linux 7.3 and 7.4, the Clone a Database workflow fails due to Oracle bug 25638411. This bug is fixed in Oracle Patch 19544733 ("STRICT BEHAVIOR OF LINKER IN 4.8.2 VERSION OF GCC-BINUTILS"). You must apply this patch to the GRID_HOME before you can run the clone workflow on RHEL/CentOS/Oracle Linux 7.3 or 7.4. Refer to the HPE Nimble Storage Linux Toolkit Release Notes for more information.

Partition requirement:

• Use whole multipath Linux disks for ASMLib disks/Oracle ASM diskgroups.

• Do not create partitions on multipath Linux disks that will make up the ASMLib disks/Oracle ASM diskgroups.

NCM Prerequisites

Prerequisites and Support

The NimbleOS and host OS prerequisites for Linux NCM are:

• NimbleOS 2.3.12 or higher

• RHEL 6.5 and above

• RHEL 7.1 and above

• CentOS 6.5 and above

• CentOS 7.1 and above

• Oracle Linux 6.5 and above

• Oracle Linux 7.1 and above

• Ubuntu LTS 14.04

• Ubuntu LTS 16.04

• Ubuntu LTS 18.04
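You can express the supported host operating systems above as a small check. This sketch uses hypothetical distribution labels (rhel, centos, ol, ubuntu). It also treats "6.5 and above" and "7.1 and above" as applying within the 6.x and 7.x release lines. That reading is an interpretation of the list, not a stated rule. Pass the full release version, for example 7.6. On CentOS 7, /etc/os-release reports only the major version, so read the release from /etc/redhat-release instead.

```shell
#!/usr/bin/env bash
# Hypothetical check of a host OS against the NCM support list.
# Arguments: a distribution label (rhel, centos, ol, or ubuntu) and the
# full release version, e.g. "7.6". The labels are illustrative assumptions.

# version_ge A B: succeeds when version A >= version B (GNU sort -V ordering).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

ncm_os_supported() {
    local distro=$1 ver=$2
    case "$distro" in
        rhel|centos|ol)
            # Interpreted as "6.5 or later 6.x" and "7.1 or later 7.x".
            case "$ver" in
                6.*) version_ge "$ver" 6.5 ;;
                7.*) version_ge "$ver" 7.1 ;;
                *) return 1 ;;
            esac ;;
        ubuntu)
            # Only the listed LTS releases.
            case "$ver" in
                14.04|16.04|18.04) return 0 ;;
                *) return 1 ;;
            esac ;;
        *) return 1 ;;
    esac
}
```

For example, ncm_os_supported centos 6.10 succeeds because sort -V orders 6.5 before 6.10. A plain string comparison would get this wrong.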

Linux NCM is supported on the following protocols:

• iSCSI

• Fibre Channel

Host Prerequisites

Before you begin, ensure these packages are installed on the host.

• sg3_utils and sg3_utils-libs

• device-mapper-multipath

• iscsi-initiator-utils (for iSCSI deployments)

Port Requirement

The default REST port, 5392, must not be blocked by firewalls or host iptables rules.
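One way to confirm that the REST port is open from the host is a plain TCP connection attempt. This sketch relies on bash's /dev/tcp redirection and the coreutils timeout command rather than on any NLT tool. The function name and the 5-second timeout are arbitrary choices.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a TCP connection to HOST:PORT can be opened.
# PORT defaults to 5392, the default array REST port. Function name is hypothetical.

rest_port_reachable() {
    local host=$1 port=${2:-5392}
    # Open and immediately close a connection; give up after 5 seconds.
    timeout 5 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Example with a placeholder management address:
#   rest_port_reachable 192.0.2.10 && echo "REST port 5392 is reachable"
```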

Docker Prerequisites

To use the HPE Nimble Storage Docker Volume plugin, the following prerequisites must be met.

Software requirements:

• NimbleOS 3.4 or higher

• CentOS 7.2 and above


• RHEL 7.2 and above

• Ubuntu LTS 14.04

• Ubuntu LTS 16.04

• Docker 1.12.1 or later to use either as a standalone engine or with SwarmKit

• Docker 1.12.1-cs or later to use either as a standalone CS engine or with UCP

On Red Hat and CentOS hosts, use root access from a terminal window and enter the following command:

[root@ ~]# yum install -y device-mapper-multipath iscsi-initiator-utils sg3_utils

On Ubuntu hosts, use root access from a terminal window and enter the following commands:

[root@ ~]# apt-get update

[root@ ~]# apt-get install -y multipath-tools open-iscsi sg3-utils xfsprogs
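If you script host preparation, you can select the distribution-specific commands above in one place. In this sketch, the function only prints the commands copied from this section, so you can review them before piping them to a root shell. The labels rhel, centos, and ubuntu are assumed to match the ID values in /etc/os-release on the hosts you target.

```shell
#!/usr/bin/env bash
# Sketch: print the host package installation commands for a distribution label.
# The package lists are copied from this section; the function name is hypothetical.

host_package_cmds() {
    case "$1" in
        rhel|centos)
            echo "yum install -y device-mapper-multipath iscsi-initiator-utils sg3_utils" ;;
        ubuntu)
            echo "apt-get update"
            echo "apt-get install -y multipath-tools open-iscsi sg3-utils xfsprogs" ;;
        *)
            echo "unsupported distribution: $1" >&2
            return 1 ;;
    esac
}

# On a real host, as root, after reviewing the output:
#   . /etc/os-release && host_package_cmds "$ID" | sh
```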

Follow the steps documented on the Docker website to install the latest Docker Engine on your Linux host: https://docs.docker.com/engine/installation/

NLT Backup Service Prerequisites

The NimbleOS and host OS prerequisites for NLT Backup Service are:

• NimbleOS 5.0.x or higher

• For replication, the downstream group must be running NimbleOS 4.x or higher

Nimbletune Prerequisites

Nimbletune is supported on any of the following host operating systems:

• RHEL 6.5 and above

• RHEL 7.1 and above

• CentOS 6.5 and above

• CentOS 7.1 and above

• Oracle Linux 6.5 and above

• Oracle Linux 7.1 and above

• Ubuntu LTS OS 14.04

• Ubuntu LTS OS 16.04

• Ubuntu LTS OS 18.04

Kubernetes Prerequisites

To use the HPE Nimble Storage Kubernetes FlexVolume plugin and Kubernetes Storage Controller, the following prerequisites must be met.

Software requirements:

• NimbleOS 5.0.2 or higher

• CentOS 7.2 and above

• RHEL 7.2 and above

• Ubuntu LTS 14.04

• Ubuntu LTS 16.04

• Docker 1.13 or later

• Internet connectivity

Follow the steps documented on the Kubernetes website to download and install Kubernetes on various platforms: https://kubernetes.io/docs/setup/
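The minimum NimbleOS versions in the prerequisite topics above can be collected into one lookup. This is a hypothetical helper, not part of NLT. The component keywords are labels chosen for this example, the values are copied from this chapter, and versions are compared with GNU sort -V.

```shell
#!/usr/bin/env bash
# Sketch: check an array's NimbleOS version against the per-component minimums
# listed in this chapter. Component keywords are illustrative labels.

# Print the minimum NimbleOS version for a component; fail for unknown names.
min_nimbleos() {
    case "$1" in
        ncm)            echo 2.3.12 ;;
        docker)         echo 3.4 ;;
        oracle)         echo 3.5 ;;
        backup-service) echo 5.0 ;;
        kubernetes)     echo 5.0.2 ;;
        *) return 1 ;;
    esac
}

# version_ge A B: succeeds when version A >= version B (GNU sort -V ordering).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# nimbleos_ok COMPONENT VERSION: succeeds if VERSION meets the component minimum.
nimbleos_ok() {
    local min
    min=$(min_nimbleos "$1") || return 1
    version_ge "$2" "$min"
}
```

For example, nimbleos_ok kubernetes 5.0.1.0 fails, while nimbleos_ok kubernetes 5.0.7.200 succeeds. For the Backup Service, the downstream replication partner has its own, lower minimum (NimbleOS 4.x), which this sketch does not check.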


Download the NLT Installation Package

There are two ways to download the HPE Nimble Storage Linux Toolkit (NLT) installation package: using the GUI or using the CLI.

Note The Application Data Manager and Docker Volume plugins are mutually exclusive.

Procedure

Choose one of the following methods to download the installation package.

Download using the GUI

1

Download the NLT installation package from one of the following two locations:

• If you have an InfoSight account, you can download the NLT installer from https://update.nimblestorage.com/NLT/2.4.1/nlt_installer_2.4.1.13.

• If you do not have an InfoSight account, you can download the NLT installer from https://infosight.hpe.com/InfoSight/media/software/active/public/17/211/Anonymous_NLT_241-13_Download_Form.html

2

Click Resources > Software Downloads.

3

In the Integration Kits pane, click Linux Toolkit (NLT).

4

From the Linux Toolkit (NLT) page, click NLT Installer under Current Version. When prompted, save the installer to your local host.

Note The HPE Nimble Storage Linux Toolkit Release Notes for the package are also available from this page.

Download using the CLI

For an unattended, non-interactive installation, use either of the following commands to download NLT. Quote the URL so that the shell does not interpret the & character.

curl -o nlt_installer_2.4.1.13 "https://infosight.hpe.com/InfoSight/media/software/active/public/17/211/nlt_installer_2.4.1.13?accepted_eula=yes&confirm=Scripted"

wget -O nlt_installer_2.4.1.13 "https://infosight.hpe.com/InfoSight/media/software/active/public/17/211/nlt_installer_2.4.1.13?accepted_eula=yes&confirm=Scripted"
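In scripts, you can wrap the CLI download so that it uses whichever of curl or wget is installed. The file name and URL below are copied from the CLI option. Quoting the URL keeps the shell from treating & as a background operator. The variable and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: unattended NLT download with curl, falling back to wget.

NLT_FILE=nlt_installer_2.4.1.13
NLT_URL="https://infosight.hpe.com/InfoSight/media/software/active/public/17/211/${NLT_FILE}?accepted_eula=yes&confirm=Scripted"

download_nlt() {
    local dest=${1:-./$NLT_FILE}
    if command -v curl >/dev/null 2>&1; then
        # -f: fail on HTTP errors, -L: follow redirects
        curl -fL -o "$dest" "$NLT_URL"
    elif command -v wget >/dev/null 2>&1; then
        wget -O "$dest" "$NLT_URL"
    else
        echo "download_nlt: neither curl nor wget is installed" >&2
        return 1
    fi && chmod +x "$dest"   # make the installer executable only after a successful download
}
```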

What to do next

Complete the steps in Install NLT Using the GUI.

Install NLT Using the GUI

The NLT installer detects your host information and prompts you to install the components that are supported in your environment.

Before you begin

You must have the installation package stored locally on your host.

Procedure

1

Make sure the file is executable, and then run the installer as root:

chmod +x /path/to/download/nlt_installer_2.4.1.13

/path/to/download/nlt_installer_2.4.1.13

Note For automated installations, you can use the --silent-mode option. Use the --help option to see more installation options.

2

Accept the EULA.

3

Enter yes to continue with the NLT installation.

4

If prompted, enter yes to install the Connection Manager (NCM).


5

If you are installing NCM, specify whether scale-out (multi-array group) mode is required with Connection Manager:

• Enter no if there is only a single array in the group.

• Enter yes only if you have a multi-array group connected to the host.

Note Refer to Considerations for Using NCM in Scale-out Mode for more information.

6

If prompted, enter yes to install the Oracle plugin.

Note You are only prompted if your environment supports the NORADATAMGR.

7

If prompted, enter yes to install the Docker plugin.

Note You are only prompted if your environment supports the Docker Volume plugin.

8

If prompted, enter yes to install the Kubernetes FlexVolume plugin.

Note You are only prompted if you chose to install the Docker Volume plugin. Docker is required to use the Kubernetes FlexVolume Plugin and the Kubernetes Storage Controller. For instructions on installing the Kubernetes Storage Controller, see HPE Nimble Storage Kubernetes FlexVolume Plugin and Kubernetes Storage Controller.

9

Enter yes to enable iSCSI services.

10

Verify the status of the plugins you installed.

nltadm --status

11

Add an array group to NLT so that the plugins can provision volumes.

nltadm --group --add --ip-address <hostname or management IP address> --username <username> --password <password>

Note We recommend using the IP address as the API endpoint to avoid unrelated connectivity issues with NLT.

12

Verify management communication between the host and the array.

nltadm --group --verify --ip-address <management IP address>
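For scripted deployments, you can wrap the procedure above in one function: make the installer executable, run it, check the status, and then add and verify the group. This sketch makes several assumptions. It presumes that --silent-mode, mentioned in the Note in step 1, completes without prompts, so check the installer's --help output first. The arguments are placeholders. Passing --password on the command line makes the password visible in the host's process list.

```shell
#!/usr/bin/env bash
# Sketch of the install-and-register procedure for scripted use.
# Assumes --silent-mode runs without prompts; all arguments are placeholders.

install_and_register() {
    local installer=$1 array_ip=$2 user=$3 password=$4
    # Step 1: the installer must be run as root.
    if [ "$(id -u)" -ne 0 ]; then
        echo "install_and_register: run as root" >&2
        return 1
    fi
    chmod +x "$installer" || return 1
    "$installer" --silent-mode || return 1
    # Step 10: show the status of the installed plugins.
    nltadm --status || return 1
    # Step 11: add the array group (the password is visible in ps output).
    nltadm --group --add --ip-address "$array_ip" \
        --username "$user" --password "$password" || return 1
    # Step 12: verify management connectivity to the group.
    nltadm --group --verify --ip-address "$array_ip"
}

# Example (placeholder values):
#   install_and_register /path/to/download/nlt_installer_2.4.1.13 192.0.2.10 admin 'secret'
```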

NLT Commands

Use these commands to manage the NLT services (NCM, NORADATAMGR, Docker, the Config Collector, and the Backup Service).

nltadm --help

usage:

nltadm [--start <ncm|oracle|docker|collector|backup-service> ]

nltadm [--stop <ncm|oracle|docker|collector|backup-service> ]

nltadm [--enable <oracle|docker|collector|backup-service> ]

nltadm [--disable <oracle|docker|collector|backup-service> ]

nltadm [--status ]

nltadm [--config {--dry-run} {--format <xml|json>}]

nltadm [--version ]

nltadm [--diag {CASE NUMBER} ]

nltadm [--group --add --ip-address <GROUP MANAGEMENT IP> --username <GROUP USERNAME> {--password <GROUP PASSWORD> } ]

nltadm [--group --remove --ip-address <GROUP MANAGEMENT IP> ]

nltadm [--group --verify --ip-address <GROUP MANAGEMENT IP> ]

nltadm [--group --list ]

nltadm [--help ]

--add Add a Nimble Group

--config Send host configuration data to the array.

--diag <CASE NUMBER> Collect diagnostic dump for NLT components

--disable <SERVICE NAME> Disable a service

--dry-run Dump host configuration information without sending to array.

--enable <SERVICE NAME> Enable a service

--format <OUTPUT FORMAT> Output format for host configuration information. Should be only used with --dry-run option

--group Nimble Group commands

-h,--help Prints this help message

--ip-address <GROUP MANAGEMENT IP> Nimble Group management IP address

--list List Nimble Groups along with connection status

--password <GROUP PASSWORD> Nimble Group password

--remove Remove a Nimble group

--start <SERVICE NAME> Start a service

--status Display the status of each service

--stop <SERVICE NAME> Stop a service

--username <GROUP USERNAME> Nimble Group username

-v,--version Display the version of Nimble Linux Toolkit

--verify Verify connectivity to a Nimble Group
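The usage text above limits which service names each action accepts. In particular, ncm can be started and stopped but is not listed for --enable or --disable. A small wrapper can check the name before it calls nltadm. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: validate ACTION/SERVICE pairs against the choices shown in "nltadm --help".

valid_nlt_service() {
    local action=$1 service=$2
    case "$action:$service" in
        start:ncm|stop:ncm) return 0 ;;   # ncm only appears for --start/--stop
        start:*|stop:*|enable:*|disable:*)
            case "$service" in
                oracle|docker|collector|backup-service) return 0 ;;
            esac ;;
    esac
    return 1
}

# Stop and then start a service after checking its name.
restart_nlt_service() {
    valid_nlt_service stop "$1" || { echo "unknown service: $1" >&2; return 1; }
    nltadm --stop "$1" && nltadm --start "$1"
}
```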


HPE Nimble Storage Oracle Application Data Manager

The HPE Nimble Storage Oracle Application Data Manager enables you to take snapshots for backups and recovery points and to create clones of both datastores and volumes. NORADATAMGR simplifies the backup and cloning processes so that you do not need to understand the mapping between your database and the objects in the array.

NORADATAMGR, which uses a command line interface, is part of the HPE Nimble Storage Linux Toolkit (NLT) and works with Oracle Automatic Storage Management (ASM).

When you use NLT 2.4.0 or later, NORADATAMGR also supports Oracle Recovery Manager (RMAN). The NORADATAMGR catalog-backup command makes HPE Nimble Storage snapshot data available to RMAN for restore operations. This is faster than copying the data, and it allows you to use a snapshot of an HPE Nimble Storage database instance for restore operations.

You can use role-based access control (RBAC) with NORADATAMGR. RBAC is provided at the host level: you maintain a list of hosts that are allowed to access an instance.

The supported Oracle workflows can be performed on both local and remote hosts.

Note NORADATAMGR on a single host can connect to only one array group. Connecting multiple hosts whose instances share the same SID to the same group is not supported.

To use NORADATAMGR, you must be running Oracle 11gR2 or later. For NLT V3.0, Oracle 12c is supported as well. In addition, Oracle ASM must be installed on a system running Red Hat Enterprise Linux 6.5 or higher.

The HPE Nimble Storage Validated Configuration Matrix tool, which is online at https://infosight.hpe.com/resources/nimble/validated-configuration-matrix, contains information about requirements for using NLT with Oracle.

Note

For NORADATAMGR to work with ASMLib, you must verify or adjust the disk scan ordering in Oracle ASM. To adjust the disk scan ordering, ensure that the following parameters are set in the /etc/sysconfig/oracleasm file:

• The ORACLEASM_SCANORDER parameter is set to "dm- mpath".

• The ORACLEASM_SCANEXCLUDE parameter is set to "sd".
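To verify the ASMLib settings from the Note above, you can source the configuration file in a subshell and compare the two values. The expected strings are copied verbatim from the Note, and the function name is hypothetical. Sourcing the file assumes that it contains only shell-style KEY=value assignments.

```shell
#!/usr/bin/env bash
# Sketch: succeed if the oracleasm sysconfig file has the scan settings from the Note.

asm_scan_settings_ok() {
    local file=${1:-/etc/sysconfig/oracleasm}
    # Source in a subshell so the file's variables do not leak into this shell.
    (
        . "$file" || exit 1
        [ "$ORACLEASM_SCANORDER" = "dm- mpath" ] &&
        [ "$ORACLEASM_SCANEXCLUDE" = "sd" ]
    )
}
```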

The noradatamgr.conf File

Below is the default noradatamgr.conf file, with explanations of the parameters. When you set the user and group, ensure that the user is a valid OS user and that both the group and the user have sysdba privileges. If the specified user does not have the correct sysdba privileges, NORADATAMGR operations will fail. Changes to noradatamgr.conf require an NLT service restart to take effect.

/opt/NimbleStorage/etc/noradatamgr.conf

# Oracle user and group, and db user
orcl.user.name=oracle
orcl.user.group=oinstall
orcl.db.user=sysdba

# Maximum time in seconds that the Nimble Oracle App Data Manager will wait
# for a database to get into and out of hotbackup mode.
orcl.hotBackup.start.timeout=14400
orcl.hotBackup.end.timeout=15

# Snapshot name prefix if a snapshot needs to be taken before cloning an
# instance
nos.clone.snapshot.prefix=BaseFor

# NPM parameter for enabling hot-backup mode for all instances on the host
# during scheduled snapshots
npm.hotbackup.enable=false
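If you script changes to noradatamgr.conf, a pair of helpers like the following can read and update a single key=value entry. This is a minimal Bash sketch, assuming the simple format shown above (no spaces around "=", comment lines starting with "#"); conf_get and conf_set are hypothetical names, and the NLT service still has to be restarted afterwards for a change to take effect.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the key=value format of noradatamgr.conf.
# Assumes no whitespace around "=", "#" at the start of comment lines,
# and values without backslashes (awk -v would interpret them).

# conf_get FILE KEY: print the value of KEY (last assignment wins);
# exit status 1 if the key is absent.
conf_get() {
    local file="$1" key="$2"
    awk -F= -v k="$key" '
        $0 !~ /^[[:space:]]*#/ && $1 == k { v = substr($0, index($0, "=") + 1); found = 1 }
        END { if (found) print v; else exit 1 }
    ' "$file"
}

# conf_set FILE KEY VALUE: replace the first assignment of KEY, drop any
# later duplicates, or append KEY=VALUE if the key is missing.
conf_set() {
    local file="$1" key="$2" value="$3" tmp
    tmp=$(mktemp) || return 1
    awk -F= -v k="$key" -v v="$value" '
        $0 !~ /^[[:space:]]*#/ && $1 == k { if (!done) print k "=" v; done = 1; next }
        { print }
        END { if (!done) print k "=" v }
    ' "$file" >"$tmp" && cat "$tmp" >"$file"   # cat keeps the original owner and mode
    local status=$?
    rm -f "$tmp"
    return "$status"
}
```

For example, conf_set /opt/NimbleStorage/etc/noradatamgr.conf nos.clone.snapshot.prefix PreClone changes the clone snapshot prefix; restart the NLT service afterwards.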

ASM_DISKSTRING Settings

HPE Nimble Storage recommends the following ASM_DISKSTRING settings when you use HPE Nimble Storage Oracle Application Data Manager. These settings ensure that ASM properly discovers the devices used to build ASM disk groups.

On udev systems, ASM_DISKSTRING should be set to /dev/mapper.

On ASMLib systems, ASM_DISKSTRING should either be left blank, so that discovery defaults to ORCL:* style disk paths, or be set to /dev/oracleasm/disks.
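You can check the current value by connecting SQL*Plus to the ASM instance (for example, as the Grid Infrastructure owner with sqlplus / as sysasm) and running SHOW PARAMETER asm_diskstring. The Bash sketch below compares such a value against the recommendations above. asm_diskstring_ok is a hypothetical helper, and treating a glob under /dev/mapper (such as /dev/mapper/*) as equivalent to /dev/mapper is an assumption, not an HPE statement.

```shell
#!/usr/bin/env bash
# Hypothetical helper: asm_diskstring_ok udev|asmlib VALUE
# Returns 0 if VALUE matches the recommendation for the naming scheme,
# 1 if it does not, and 2 for an unknown scheme.
asm_diskstring_ok() {
    local scheme="$1" value="$2"
    case "$scheme" in
        udev)
            # Assumption: a glob under /dev/mapper counts as /dev/mapper.
            case "$value" in
                /dev/mapper|/dev/mapper/*) return 0 ;;
            esac
            ;;
        asmlib)
            # Blank (ORCL:* discovery) or the ASMLib disks directory.
            case "$value" in
                ''|/dev/oracleasm/disks|/dev/oracleasm/disks/*) return 0 ;;
            esac
            ;;
        *)
            echo "unknown device naming scheme: $scheme" >&2
            return 2
            ;;
    esac
    return 1
}
```

For example, asm_diskstring_ok udev '/dev/mapper/*' && echo "ASM_DISKSTRING looks fine" reports success for a udev system.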

Accessing NORADATAMGR

Use the following instructions to allow non-root users to execute noradatamgr.

To allow anyone in the dba group to execute noradatamgr:

chgrp dba /opt/NimbleStorage/etc
chmod g+rx /opt/NimbleStorage/etc
chgrp dba /opt/NimbleStorage/etc/client
chmod g+rx /opt/NimbleStorage/etc/client
chgrp dba /opt/NimbleStorage/bin
chmod g+x /opt/NimbleStorage/bin
chgrp dba /opt/NimbleStorage/etc/nora*
chmod g+r /opt/NimbleStorage/etc/nora*
chgrp dba /usr/bin/noradatamgr
chmod g+x /usr/bin/noradatamgr
chgrp dba /opt/NimbleStorage/etc/client/log4j.properties
chmod g+r /opt/NimbleStorage/etc/client/log4j.properties

To allow the oracle user to execute noradatamgr:

chown oracle /opt/NimbleStorage/etc
chmod u+rx /opt/NimbleStorage/etc
chown oracle /opt/NimbleStorage/etc/client
chmod u+rx /opt/NimbleStorage/etc/client
chown oracle /opt/NimbleStorage/bin
chmod u+x /opt/NimbleStorage/bin
chown oracle /opt/NimbleStorage/etc/nora*
chmod u+r /opt/NimbleStorage/etc/nora*
chown oracle /usr/bin/noradatamgr
chmod u+x /usr/bin/noradatamgr
chown oracle /opt/NimbleStorage/etc/client/log4j.properties
chmod u+r /opt/NimbleStorage/etc/client/log4j.properties
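The two command lists above differ mainly in the ownership command (chgrp or chown) and the permission class (g or u). The following Bash sketch applies either variant in one call: traverse and read access on the configuration directories, execute access on the bin directory and the launcher, and read access on the configuration files (noradatamgr* and log4j.properties). grant_noradatamgr_access is a hypothetical helper; its optional third argument (a directory prefix, empty by default) exists only so the function can be tried against a scratch copy of the tree. Run it as root against the real paths.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the noradatamgr access commands in one call.
#   grant_noradatamgr_access group dba      # members of the dba group
#   grant_noradatamgr_access user oracle    # the oracle user
# PREFIX (third argument) is prepended to every path; leave it empty for
# the real /opt/NimbleStorage and /usr/bin locations.
grant_noradatamgr_access() {
    local mode="$1" principal="$2" prefix="${3:-}"
    local nlt="$prefix/opt/NimbleStorage" own cls
    case "$mode" in
        group) own=chgrp; cls=g ;;
        user)  own=chown; cls=u ;;
        *) echo "usage: grant_noradatamgr_access group|user NAME [PREFIX]" >&2
           return 2 ;;
    esac
    # Configuration directories: read and traverse.
    "$own" "$principal" "$nlt/etc" "$nlt/etc/client" || return 1
    chmod "$cls+rx" "$nlt/etc" "$nlt/etc/client" || return 1
    # Binary directory and launcher: traverse/execute.
    "$own" "$principal" "$nlt/bin" "$prefix/usr/bin/noradatamgr" || return 1
    chmod "$cls+x" "$nlt/bin" "$prefix/usr/bin/noradatamgr" || return 1
    # Configuration files: read.
    "$own" "$principal" "$nlt"/etc/nora* "$nlt/etc/client/log4j.properties" || return 1
    chmod "$cls+r" "$nlt"/etc/nora* "$nlt/etc/client/log4j.properties"
}
```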


NORADATAMGR Workflows

The HPE Nimble Storage Oracle Application Data Manager (NORADATAMGR) uses the following command

options in the supported NORADATAMGR workflows:

[root]# noradatamgr --help
usage:
noradatamgr [--describe [--instance {SID}] [--diskgroup {DISKGROUP NAME}] ]
noradatamgr [--edit --instance {SID}
            --allow-hosts {HOSTNAME1,HOSTNAME2... | ALL} ]
noradatamgr [--snapshot --instance {SID} --snapname {SNAPSHOT NAME}
            [--hot-backup]
            [--replicate] ]
noradatamgr [--clone --instance {SID} --clone-name {DB NAME}
            [--snapname {SNAPSHOT NAME}] [--clone-sid {SID}]
            [--clone-home {ORACLE HOME}] [--override-pfile {ABSOLUTE PATH TO PFILE}]
            [--folder {FOLDER NAME}] [--diskgroups-only] ]
noradatamgr [--list-snapshots --instance {SID} [--verbose] [--npm] [--cli] ]
noradatamgr [--delete-snapshot --instance {SID} --snapname {SNAPSHOT NAME}
            [--delete-replica] ]
noradatamgr [--get-pfile --instance {SID} --snapname {SNAPSHOT NAME} ]
noradatamgr [--get-cfile --instance {SID} --snapname {SNAPSHOT NAME} ]
noradatamgr [--destroy [--instance {SID}] [--diskgroup {DISKGROUP NAME}]
            [--silent-mode] ]
noradatamgr [--enable-npm --instance {SID}]
noradatamgr [--disable-npm --instance {SID}]
noradatamgr [--catalog-backup --instance {TARGET_DB_SID}
            --snapname {SNAPSHOT_TO_RECOVER_FROM}
            --diskgroup-prefix {PREFIX_FOR_NAMING_CLONED_DISKGROUPS}
            --rcat {NET_SERVICE_NAME_FOR_RECOVERY_CATALOG}
            --rcat-user {RECOVERY_CATALOG_USER} ]
noradatamgr [--uncatalog-backup --instance {TARGET_DB_SID}
            --rcat-user {RECOVERY_CATALOG_USER}
            [--rcat-passwd {PASSWORD_FOR_RCAT_USER}] ]
noradatamgr [--help]

Nimble Storage Oracle App Data Manager
--allow-hosts <HOSTNAME1[, HOSTNAME2 ...]>  Comma separated list of hostnames that a database instance can be cloned to.
-c,--clone                                  Clone a database instance.
--cli                                       Filter only NORADATAMGR CLI triggered snapshots.
--clone-home <ORACLE HOME>                  ORACLE HOME of the cloned instance.
--clone-name <DB NAME>                      Database name of the cloned instance.
--clone-sid <INSTANCE SID>                  ORACLE SID of the cloned instance.
-d,--describe                               Describe the storage for a database instance or an ASM diskgroup.
--delete-replica                            Delete the snapshot from remote replication group as well.
--destroy                                   Destroy an ASM diskgroup.
--disable-npm                               Disable scheduled snapshots for the given instance.
--diskgroup <DISKGROUP NAME>                ASM diskgroup name.
--diskgroups-only                           Only clone the diskgroups of the specified instance, do not clone the instance itself.
-e,--edit                                   Edit the storage properties for a database instance.


--enable-npm                                Configure the volume collection of the instance to enable scheduled snapshots.
--folder <FOLDER>                           Name of the folder in which cloned volumes must reside.
-h,--help                                   Display this help and exit.
--hot-backup                                Indicates the database must be put in hot backup mode before a snapshot backup is taken.
--instance <ORACLE SID>                     Database Instance SID.
-l,--list-snapshots                         List the snapshot backups of a database instance.
--no-rollback                               Do not rollback cloned disks and diskgroups on failure of the clone workflow.
--npm                                       Filter only NPM schedule triggered snapshots.
--override-pfile <OVERRIDE PFILE>           Absolute path to file containing override values for pfile properties of a cloned instance.
-p,--get-pfile                              Print contents of the pfile from a snapshot backup.
-r,--delete-snapshot                        Destroy the snapshot backup for a database instance.
--replicate                                 Replicate the snapshot.
-s,--snapshot                               Create an application consistent or crash consistent snapshot backup of a database instance.
--silent-mode                               Initiate workflow in a non-interactive/silent mode without user intervention.
--snapname <SNAPSHOT NAME>                  Name of the snapshot backup.
--verbose                                   Verbose option.
-x,--get-cfile                              Retrieve the control file from a snapshot backup.

Describe an Instance

You can obtain information about the storage footprint of an instance by using the --describe --instance command. The instance must be a local, live database instance backed by ASM diskgroups that contain disks backed by HPE Nimble Storage volumes. In that case, the information displayed includes the following:

• Diskgroup names

• Disk and device names

• HPE Nimble Storage volume names and sizes

• Hosts allowed to access the instance to perform operations such as cloning or listing snapshots

If the instance is down, or not backed by diskgroups, or if the diskgroups do not contain disks backed by HPE

Nimble Storage volumes, you see messages describing the following conditions:

• Instances that are down or not backed by ASM diskgroups

• Diskgroups that do not contain any HPE Nimble Storage disks (disks backed by HPE Nimble Storage

volumes)

If the disk groups of the Oracle database instance are backed by an HPE Nimble Storage array running NimbleOS 5.x or later, the NPM status is displayed at the bottom of the describe output.

Procedure

Describe an instance.

noradatamgr --describe --instance instance_name


Example

[root]# noradatamgr --describe --instance rac1db1

Diskgroup: FRADG

Disk: /dev/oracleasm/disks/FRA2

Device: dm-7

Volume: rac-scan1-fra2

Serial number: 30ee5255564250406c9ce9002c92b39c

Size: 120GB

Disk: /dev/oracleasm/disks/FRA1

Device: dm-5

Volume: rac-scan1-fra1

Serial number: a37f89039236cb1f6c9ce9002c92b39c

Size: 120GB

Diskgroup: DATADG

Disk: /dev/oracleasm/disks/DATA1

Device: dm-4

Volume: rac-scan1-data1

Serial number: e7b4816607f7b0146c9ce9002c92b39c

Size: 100GB

Disk: /dev/oracleasm/disks/DATA2

Device: dm-6

Volume: rac-scan1-data2

Serial number: 74278282d50260816c9ce9002c92b39c

Size: 100GB

Allowed hosts: ALL

NPM enabled: No
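Because the describe output is plain text, it can be post-processed in scripts. The following Bash sketch condenses --describe --instance output into one tab-separated line per volume (diskgroup, volume, size). describe_summary is a hypothetical helper, and it assumes the Diskgroup:/Volume:/Size: layout shown in the example above; that layout is not documented here as a stable interface.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "noradatamgr --describe --instance" output on
# stdin and print "diskgroup<TAB>volume<TAB>size" for each volume.
# Assumes each disk block lists Volume: before Size:, as in the example.
describe_summary() {
    awk '
        /^[[:space:]]*Diskgroup:/ { dg = $2 }
        /^[[:space:]]*Volume:/    { vol = $2 }
        /^[[:space:]]*Size:/      { printf "%s\t%s\t%s\n", dg, vol, $2 }
    '
}
```

For example, noradatamgr --describe --instance rac1db1 | describe_summary would print four lines for the output above: two for FRADG and two for DATADG.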

Describe a Diskgroup

You can obtain information about the storage footprint of any ASM diskgroup on a host by using the --describe --diskgroup command. The information displayed includes the following:

• Disk and device names

• Volume names and sizes for all the disks in the diskgroup

If the diskgroup does not contain any HPE Nimble Storage disks (disks backed by HPE Nimble Storage volumes), you see a message describing that condition.

Procedure

Describe a diskgroup.

noradatamgr --describe --diskgroup diskgroup_name

Example

[root]# noradatamgr --describe --diskgroup DATADG

DATADG

Disk: /dev/oracleasm/disks/DATA1

Device: dm-0

Volume: rh68db-data1


Serial number: c0505f3bfe7fc7fe6c9ce900f4f8b199

Size: 100GB

Disk: /dev/oracleasm/disks/DATA2

Device: dm-1

Volume: rh68db-data2

Serial number: 336a1fae8363f4956c9ce900f4f8b199

Size: 100GB
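A quick consistency check is to add up the volume sizes reported for a diskgroup and compare the total with the Total_MB column of asmcmd lsdg. The Bash sketch below sums the Size: lines of --describe --diskgroup output. describe_total_gb is a hypothetical helper, and it assumes every size is reported in whole GB as in the example; any other form is reported on stderr and makes the exit status non-zero. For DATADG above, two 100GB volumes give 200, which corresponds to 200 × 1024 = 204800 MB.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sum the "Size: <n>GB" lines of noradatamgr
# --describe output read on stdin and print the total in GB.
# Sizes in any other form are reported on stderr and make the exit status 1.
describe_total_gb() {
    awk '
        /^[[:space:]]*Size:/ {
            s = $2
            if (s !~ /^[0-9]+GB$/) { print "unexpected size: " s > "/dev/stderr"; bad = 1; next }
            sub(/GB$/, "", s)
            total += s
        }
        END { print total + 0; exit bad }
    '
}
```

For example: noradatamgr --describe --diskgroup DATADG | describe_total_gb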

Enable NPM for an instance

This command configures the volume collection of the instance and adds application-specific metadata to the volume collection and its volumes.

Before you begin

The array must be running NimbleOS 5.0.x or later.

Procedure

Enable NPM for an instance.

noradatamgr --enable-npm --instance SID

Example

Enable NPM for an instance

[root]# noradatamgr --instance xyz123 --enable-npm

Success: Scheduled snapshots have been enabled for instance xyz123.

Please create the required schedule on volume collection rac-scan1 to

start the Oracle scheduled snapshots.

[root]# noradatamgr --instance xyz123 --describe

Diskgroup: REDODG

Disk: /dev/oracleasm/disks/REDO1

Device: dm-1

Volume: rac-scan1-redo1

Serial number: 4556477f760e919f6c9ce9002c92b39c

Size: 30GB

Diskgroup: FRADG

Disk: /dev/oracleasm/disks/FRA1

Device: dm-5

Volume: rac-scan1-fra1

Serial number: a37f89039236cb1f6c9ce9002c92b39c

Size: 120GB

Diskgroup: DATADG

Disk: /dev/oracleasm/disks/DATA1

Device: dm-4

Volume: rac-scan1-data1

Serial number: e7b4816607f7b0146c9ce9002c92b39c

Size: 100GB

Allowed hosts: ALL

NPM enabled: Yes


Disable NPM for an instance

Before you begin

The array must be running NimbleOS 5.0.x or later.

Procedure

Disable NPM for an instance.

noradatamgr --disable-npm --instance SID

Example

Disable NPM for an instance

[root]# noradatamgr --instance xyz123 --disable-npm

Success: Scheduled snapshots have been disabled for instance xyz123.

Please delete the schedules on volume collection rac-scan1.

[root]# noradatamgr --instance xyz123 --describe

Diskgroup: REDODG

Disk: /dev/oracleasm/disks/REDO1

Device: dm-1

Volume: rac-scan1-redo1

Serial number: 4556477f760e919f6c9ce9002c92b39c

Size: 30GB

Diskgroup: FRADG

Disk: /dev/oracleasm/disks/FRA1

Device: dm-5

Volume: rac-scan1-fra1

Serial number: a37f89039236cb1f6c9ce9002c92b39c

Size: 120GB

Diskgroup: DATADG

Disk: /dev/oracleasm/disks/DATA1

Device: dm-4

Volume: rac-scan1-data1

Serial number: e7b4816607f7b0146c9ce9002c92b39c

Size: 100GB

Allowed hosts: ALL

NPM enabled: No

What to do next

Note If NLT is uninstalled or group information is removed from NLT, then NPM will automatically be disabled

for all database instances.
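As the examples show, the result of enabling or disabling NPM can be confirmed from the NPM enabled: line of the describe output. The Bash sketch below wraps both commands and checks that line afterwards. npm_status and set_npm are hypothetical helper names; the only noradatamgr options used are the documented --enable-npm, --disable-npm, --describe, and --instance.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around the documented NPM options.

# Print the value of the "NPM enabled:" line (Yes or No) from describe
# output on stdin; exit status 1 if the line is missing.
npm_status() {
    awk -F': *' '/^[[:space:]]*NPM enabled:/ { v = $2; found = 1 }
                 END { if (found) print v; else exit 1 }'
}

# set_npm SID on|off: enable or disable NPM, then confirm via --describe.
# Returns 0 only if the describe output shows the expected state.
set_npm() {
    local sid="$1" want
    case "$2" in
        on)  noradatamgr --enable-npm --instance "$sid" || return 1; want=Yes ;;
        off) noradatamgr --disable-npm --instance "$sid" || return 1; want=No ;;
        *)   echo "usage: set_npm SID on|off" >&2; return 2 ;;
    esac
    [ "$(noradatamgr --describe --instance "$sid" | npm_status)" = "$want" ]
}
```

After set_npm xyz123 on, create the schedule on the volume collection as the command output instructs; after set_npm xyz123 off, delete those schedules.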

Edit an Instance

You can edit a local live instance to modify the list of hosts that are allowed to perform cloning and snapshot-listing operations on that instance.

If some or all of the data and online redo log volumes of the instance are not in a volume collection when you run the edit command, a volume collection is created automatically. A data or online redo log volume cannot be part of two different volume collections.

The --allow-hosts option places host-level access control on a local live instance by specifying the host names that are allowed to access the instance. The following guidelines apply:


• You can use a comma-separated list of host names, or "ALL" to allow all hosts to access the instance. List each host name exactly as it is returned by the hostname command on the host to be added.

• The name of the host on which the edit workflow is run is always added to the list of allowed hosts.

• No host name validation is performed. You must enter valid host names to ensure that operations such as listing snapshots and cloning succeed.

• If you change a host name for any reason, you must run the edit workflow again using the updated list of host names.

• To add a new host to the list, you must run the edit workflow again, listing all the host names to be allowed, including the new one.

Procedure

Edit an instance.

noradatamgr --edit --instance instance_name --allow-hosts hosts_list

Example

[root]# noradatamgr --edit --instance rh68db --allow-hosts host-rhel1,host-rhel2

Allowed hosts set to: host-rhel1,host-rhel2

Success: Storage properties for database instance rh68db updated.

[root@host-rhel1 ~]# noradatamgr --describe --instance rh68db

Diskgroup: ARCHDG

Disk: /dev/oracleasm/disks/ARCH1

Device: dm-2

Volume: rh68db-arch1

Serial number: 4dc46ccf29cb4a216c9ce900f4f8b199

Size: 100GB

Diskgroup: REDODG

Disk: /dev/oracleasm/disks/REDO1

Device: dm-3

Volume: rh68db-redo1

Serial number: 62271db7e2578d7e6c9ce900f4f8b199

Size: 50GB

Diskgroup: DATADG

Disk: /dev/oracleasm/disks/DATA1

Device: dm-0

Volume: rh68db-data1

Serial number: c0505f3bfe7fc7fe6c9ce900f4f8b199

Size: 100GB

Disk: /dev/oracleasm/disks/DATA2

Device: dm-1

Volume: rh68db-data2

Serial number: 336a1fae8363f4956c9ce900f4f8b199

Size: 100GB

Allowed hosts: host-rhel1,host-rhel2
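Because the edit workflow must be given the complete list of host names, adding a host means passing the existing list again. The Bash sketch below merges the current list (as shown on the Allowed hosts: line of the describe output) with new host names, preserving order, trimming spaces, and dropping duplicates. merge_hosts is a hypothetical helper; treating an existing ALL as already covering every host is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper: merge_hosts EXISTING_LIST NEW_HOST...
# Prints a comma-separated list with each host name once, in first-seen order.
merge_hosts() {
    local existing="$1"; shift
    if [ "$existing" = "ALL" ]; then
        # Assumption: ALL already admits every host.
        printf '%s\n' ALL
        return 0
    fi
    { printf '%s\n' "$existing" | tr ',' '\n'; printf '%s\n' "$@"; } |
        awk '{ gsub(/^[[:space:]]+|[[:space:]]+$/, "") } NF && !seen[$0]++' |
        paste -sd, -
}

# Example (hosts and instance from the example above):
#   current=$(noradatamgr --describe --instance rh68db |
#             awk -F': *' '/^Allowed hosts:/ { print $2 }')
#   noradatamgr --edit --instance rh68db --allow-hosts "$(merge_hosts "$current" host-rhel3)"
```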

Take a Snapshot of an Instance

You can use NORADATAMGR to take a snapshot of a local live instance in either a standalone or an Oracle Real Application Clusters (RAC) setup.

Note Before specifying that the snapshot be replicated, ensure that replication is configured on the array for the volume collection that contains the data and online redo log volumes of the instance.

When you take a snapshot of a local live instance, the snapshot command places the data and online redo log volumes in a single volume collection, creating the collection if it does not already exist. You can optionally specify that the instance be placed in hot backup mode before the snapshot is taken, or that the snapshot be replicated to a downstream group. To place the instance in hot backup mode, the database must be running in archive log mode.

A snapshot of an Oracle instance taken by NORADATAMGR is a snapshot collection of the volume collection that contains the data and log volumes backing the instance. You can use this snapshot later to create a clone of the instance. All snapshots taken by NORADATAMGR are application-consistent and can provide instance-level recovery on an instance cloned from the snapshot. Point-in-time recovery is not supported.

A snapshot of any instance in an Oracle RAC cluster is a snapshot collection of the entire RAC database. It contains all the Oracle metadata necessary to recover the database, including the data and redo logs, and it can also be used to clone the RAC database.

NORADATAMGR always takes snapshots of RAC instances in hot backup mode: it tries to put a RAC instance in hot backup mode even if the --hot-backup option is not specified.

Before you begin

Archive log mode must be enabled for the database before you take a snapshot of a RAC instance. Archive log mode ensures that the RAC instance can be successfully put into hot backup mode.

Procedure

Take a snapshot of an instance.

noradatamgr --snapshot --instance instance_name --snapname snapshot_name [--hot-backup] [--replicate]

Example

Take a Snapshot

[root]# noradatamgr --snapshot --instance rh68db --snapname rh68.snap.603

Success: Snapshot backup rh68.snap.603 completed.

Take a Snapshot and Replicate

[root]# noradatamgr --snapshot --instance rh68db --snapname rh68db.snap.repl --replicate

Success: Snapshot backup rh68db.snap.repl completed.

[root]# noradatamgr --list-snapshot --instance rh68db --verbose

Snapshot Name: rh68db.snap.repl taken at 16-10-24 14:47:14

Instance: rh68db

Snapshot can be used for cloning rh68db: Yes

Hot backup mode enabled: No

Replication status: complete

Database version: 11.2.0.4.0

Host OS Distribution: redhat

Host OS Version: 6.8

Take a Snapshot in Hot Backup Mode

SQL> archive log list;

Database log mode Archive Mode

Automatic archival Enabled

Archive destination +ARCHDG

Oldest online log sequence 103008

Next log sequence to archive 103010

Current log sequence 103010


SQL>

[root]# noradatamgr --snapshot --instance rh68db --snapname rh68db.snap.hotbackup --hot-backup

Putting instance rh68db in hot backup mode...

Success: Snapshot backup rh68db.snap.hotbackup completed.

Taking instance rh68db out of hot backup mode...

[root@hiqa-sys-rhel1 ~]#

[root]# noradatamgr --list-snapshot --instance rh68db --verbose

Snapshot Name: rh68db.snap.hotbackup taken at 16-10-24 14:55:37

Instance: rh68db

Snapshot can be used for cloning rh68db: Yes

Hot backup mode enabled: Yes

Replication status: N/A

Database version: 11.2.0.4.0

Host OS Distribution: redhat

Host OS Version: 6.8
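Before taking a hot-backup snapshot, you can confirm archive log mode with the query SELECT log_mode FROM v$database, which returns ARCHIVELOG when it is enabled. The Bash sketch below performs that check and then takes a hot-backup snapshot with a timestamped name. snap_name, query_log_mode, log_mode_is_archivelog, and hot_snapshot are hypothetical helpers; query_log_mode assumes that the OS user oracle (the orcl.user.name default) has sqlplus on its login PATH and can connect as sysdba.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: check archive log mode, then take a hot-backup
# snapshot named <SID>.snap.<YYYYmmdd-HHMMSS>.

snap_name() {
    printf '%s.snap.%s\n' "$1" "$(date +%Y%m%d-%H%M%S)"
}

# Assumption: the oracle OS user's login environment provides sqlplus.
query_log_mode() {
    su - oracle -c "ORACLE_SID='$1' sqlplus -S '/ as sysdba'" <<'SQL'
set heading off feedback off pagesize 0
select log_mode from v$database;
exit
SQL
}

# Succeeds if stdin contains the word ARCHIVELOG (NOARCHIVELOG does not match).
log_mode_is_archivelog() {
    grep -qw 'ARCHIVELOG'
}

# hot_snapshot SID [extra noradatamgr options, e.g. --replicate]
hot_snapshot() {
    local sid="$1"; shift
    if ! query_log_mode "$sid" | log_mode_is_archivelog; then
        echo "$sid is not in ARCHIVELOG mode; hot backup mode is unavailable" >&2
        return 1
    fi
    noradatamgr --snapshot --instance "$sid" --snapname "$(snap_name "$sid")" \
        --hot-backup "$@"
}
```

For example, hot_snapshot rh68db --replicate takes a replicated hot-backup snapshot, provided replication is configured for the instance's volume collection.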

List and Filter Snapshots of an Instance

You can list snapshots of an instance that were taken using the NORADATAMGR snapshot command or through an NLT Backup Service schedule. The list can contain snapshots taken from both local and remote instances (remote instances are those that are not running on the host from which the list-snapshots command is invoked). Snapshots taken of instances that are shut down are also listed.

You can filter the list to show only snapshots triggered by NPM schedules (--npm) or only snapshots triggered by the NORADATAMGR CLI (--cli).

Note The list-snapshots command will fail if the host does not appear in the list of allowed hosts, or if the

instance does not have the correct storage configuration (data and log volumes of the instance are in one

volume collection) and the required metadata is not tagged to the volume collection.

Procedure

List snapshots of an instance.

noradatamgr --list-snapshots --instance instance_name [--npm | --cli] [--verbose]

Example

List Snapshots

[root]# noradatamgr --list-snapshot --instance rh68db
-----------------------------------+--------------+--------+-------------------
Snap Name                           Taken at       Instance Usable for cloning
-----------------------------------+--------------+--------+-------------------
rh68.snap.603                       16-10-24 12:01 rh68db   Yes
rh68db.snap.601                     16-10-21 10:36 rh68db   Yes
rh68db.snap.600                     16-10-20 14:48 rh68db   Yes
rh68db.snap.602                     16-10-21 15:21 rh68db   Yes

List Snapshots (Verbose Mode)

[root]# noradatamgr --list-snapshot --instance rh68db --verbose

Snapshot Name: rh68.snap.603 taken at 16-10-24 12:01:40

Instance: rh68db

Snapshot can be used for cloning rh68db: Yes

Hot backup mode enabled: No


Replication status: N/A

Database version: 11.2.0.4.0

Host OS Distribution: redhat

Host OS Version: 6.8

Snapshot Name: rh68db.snap.601 taken at 16-10-21 10:36:20

Instance: rh68db

Snapshot can be used for cloning rh68db: Yes

Hot backup mode enabled: No

Replication status: N/A

Database version: 11.2.0.4.0

Host OS Distribution: redhat

Host OS Version: 6.8

Snapshot Name: rh68db.snap.600 taken at 16-10-20 14:48:27

Instance: rh68db

Snapshot can be used for cloning rh68db: Yes

Hot backup mode enabled: No

Replication status: N/A

Database version: 11.2.0.4.0

Host OS Distribution: redhat

Host OS Version: 6.8

Snapshot Name: rh68db.snap.602 taken at 16-10-21 15:21:44

Instance: rh68db

Snapshot can be used for cloning rh68db: Yes

Hot backup mode enabled: No

Replication status: N/A

Database version: 11.2.0.4.0

Host OS Distribution: redhat

Host OS Version: 6.8

List Snapshots (from Remote Host)

(Note: instance rh68db is running on host-rhel1)

[root@host-rhel2]# noradatamgr --list-snapshots --instance rh68db

-----------------------------------+--------------+--------+-------------------
Snap Name                           Taken at       Instance Usable for cloning
-----------------------------------+--------------+--------+-------------------
rh68db.hot.repl.102                 16-10-24 17:36 rh68db   Yes
rh68.snap.603                       16-10-24 12:01 rh68db   Yes
rh68db.snap.601                     16-10-21 10:36 rh68db   Yes
rh68db.snap.600                     16-10-20 14:48 rh68db   Yes
rh68db.snap.repl                    16-10-24 14:47 rh68db   Yes
rh68db.snap.hotbackup               16-10-24 14:55 rh68db   Yes
rh68db.hot.repl.101                 16-10-24 17:13 rh68db   Yes

List Snapshots With Filters

[root]# noradatamgr --instance xyz123 --list-snapshot --npm
-----------------------------------+---------------+---------+--------+--------
Snap Name                           Taken at        Instance  Cloning  Created
                                                              possible using
-----------------------------------+---------------+---------+--------+--------
rac-scan1-30-min-2017-10-10::14...  17-10-10 14:00  rac1db2   Yes      NPM
rac-scan1-30-min-2017-10-10::13...  17-10-10 13:00  rac1db2   Yes      NPM
rac-scan1-30-min-2017-10-10::12...  17-10-10 12:30  rac1db2   Yes      NPM


[root]# noradatamgr --instance xyz123 --list-snapshot --cli
-----------------------------------+---------------+---------+--------+--------
Snap Name                           Taken at        Instance  Cloning  Created
                                                              possible using
-----------------------------------+---------------+---------+--------+--------
rac1db2.cli.snap.2                  17-10-09 15:04  rac1db2   Yes      CLI
rac1db2.cli.snap.3                  17-10-09 15:04  rac1db2   Yes      CLI
rac1db2.cli.snap.4                  17-10-09 15:05  rac1db2   Yes      CLI
rac1db2.cli.snap.1                  17-10-09 15:03  rac1db2   Yes      CLI
rac1db2.cli.snap.21                 17-10-09 18:39  rac1db2   Yes      CLI
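The table view truncates long snapshot names (the NPM names above end in "..."), so scripts are better served by the --verbose output, which prints full names. The Bash sketch below picks the most recent snapshot marked as usable for cloning, for example to feed into the clone workflow. latest_clonable_snapshot is a hypothetical helper; it assumes the verbose layout shown in the examples and timestamps in YY-MM-DD HH:MM:SS form, so that comparing them as strings orders them chronologically.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the newest snapshot that the verbose listing
# marks as usable for cloning; exit status 1 if there is none.
# Assumes "Snapshot Name: <name> taken at <YY-MM-DD> <HH:MM:SS>" lines,
# each followed by a "Snapshot can be used for cloning <SID>: Yes|No" line.
latest_clonable_snapshot() {
    awk '
        /^[[:space:]]*Snapshot Name:/ { name = $3; ts = $6 " " $7 }
        /^[[:space:]]*Snapshot can be used for cloning/ && $NF == "Yes" {
            if (best == "" || ts > best_ts) { best = name; best_ts = ts }
        }
        END { if (best != "") print best; else exit 1 }
    '
}

# Example:
#   snap=$(noradatamgr --list-snapshots --instance rh68db --verbose | latest_clonable_snapshot)
```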

List Pfile and Control File

Use these commands to view the pfile and control file of a database instance as captured in a snapshot taken by NORADATAMGR. If a database instance is cloned from this snapshot, the clone inherits parameters from these files. To set different pfile parameter values for the clone, use the --override-pfile option when cloning.

Procedure

List a pfile or a control file.

noradatamgr --get-pfile --instance instance_name --snapname snapshot_name

noradatamgr --get-cfile --instance instance_name --snapname snapshot_name

Example

Retrieve a pfile from a snapshot

[root]# noradatamgr --get-pfile --instance rh68db --snapname rh68db.snap.repl

Pfile contents:

rh68db.__db_cache_size=35567697920

rh68db.__java_pool_size=805306368

rh68db.__large_pool_size=268435456

rh68db.__oracle_base='/u01/app/base'#ORACLE_BASE set from environment

rh68db.__pga_aggregate_target=13555990528

rh68db.__sga_target=40667971584

rh68db.__shared_io_pool_size=0

rh68db.__shared_pool_size=3489660928

rh68db.__streams_pool_size=268435456

*.audit_file_dest='/u01/app/base/admin/rh68db/adump'

*.audit_trail='db'

*.compatible='11.2.0.4.0'

*.control_files='+REDODG/rh68db/controlfile/current.256.921347795'

*.db_block_size=8192

*.db_create_file_dest='+DATADG'

*.db_create_online_log_dest_1='+REDODG'

*.db_domain=''

*.db_name='rh68db'

*.diagnostic_dest='/u01/app/base'

*.dispatchers='(PROTOCOL=TCP) (SERVICE=rh68dbXDB)'


*.log_archive_dest_1='LOCATION=+ARCHDG'

*.log_archive_format='%t_%s_%r.dbf'

*.open_cursors=300

*.pga_aggregate_target=13535019008

*.processes=150

*.remote_login_passwordfile='EXCLUSIVE'

*.sga_target=40607154176

*.undo_tablespace='UNDOTBS1'

Retrieve a cfile from a snapshot

[root]# noradatamgr --get-cfile --instance fcrac1db1 --snapname snap.1

Control file contents:

STARTUP NOMOUNT

CREATE CONTROLFILE REUSE DATABASE "FCRAC1DB" NORESETLOGS ARCHIVELOG

MAXLOGFILES 192

MAXLOGMEMBERS 3

MAXDATAFILES 1024

MAXINSTANCES 32

MAXLOGHISTORY 4672

LOGFILE

GROUP 19 '+REDODG/fcrac1db/onlinelog/group_19.266.957110493' SIZE 120M BLOCKSIZE 512,

GROUP 20 '+REDODG/fcrac1db/onlinelog/group_20.264.957110499' SIZE 120M BLOCKSIZE 512,

GROUP 21 '+REDODG/fcrac1db/onlinelog/group_21.268.957110505' SIZE 120M BLOCKSIZE 512,

GROUP 22 '+REDODG/fcrac1db/onlinelog/group_22.267.957110509' SIZE 120M BLOCKSIZE 512,

GROUP 23 '+REDODG/fcrac1db/onlinelog/group_23.265.957110513' SIZE 120M BLOCKSIZE 512,

GROUP 24 '+REDODG/fcrac1db/onlinelog/group_24.263.957110547' SIZE 120M BLOCKSIZE 512

DATAFILE

'+DATADG/fcrac1db/datafile/system.262.955105763',

'+DATADG/fcrac1db/datafile/sysaux.260.955105763',

'+DATADG/fcrac1db/datafile/undotbs1.261.955105763',

'+DATADG/fcrac1db/datafile/users.259.955105763',

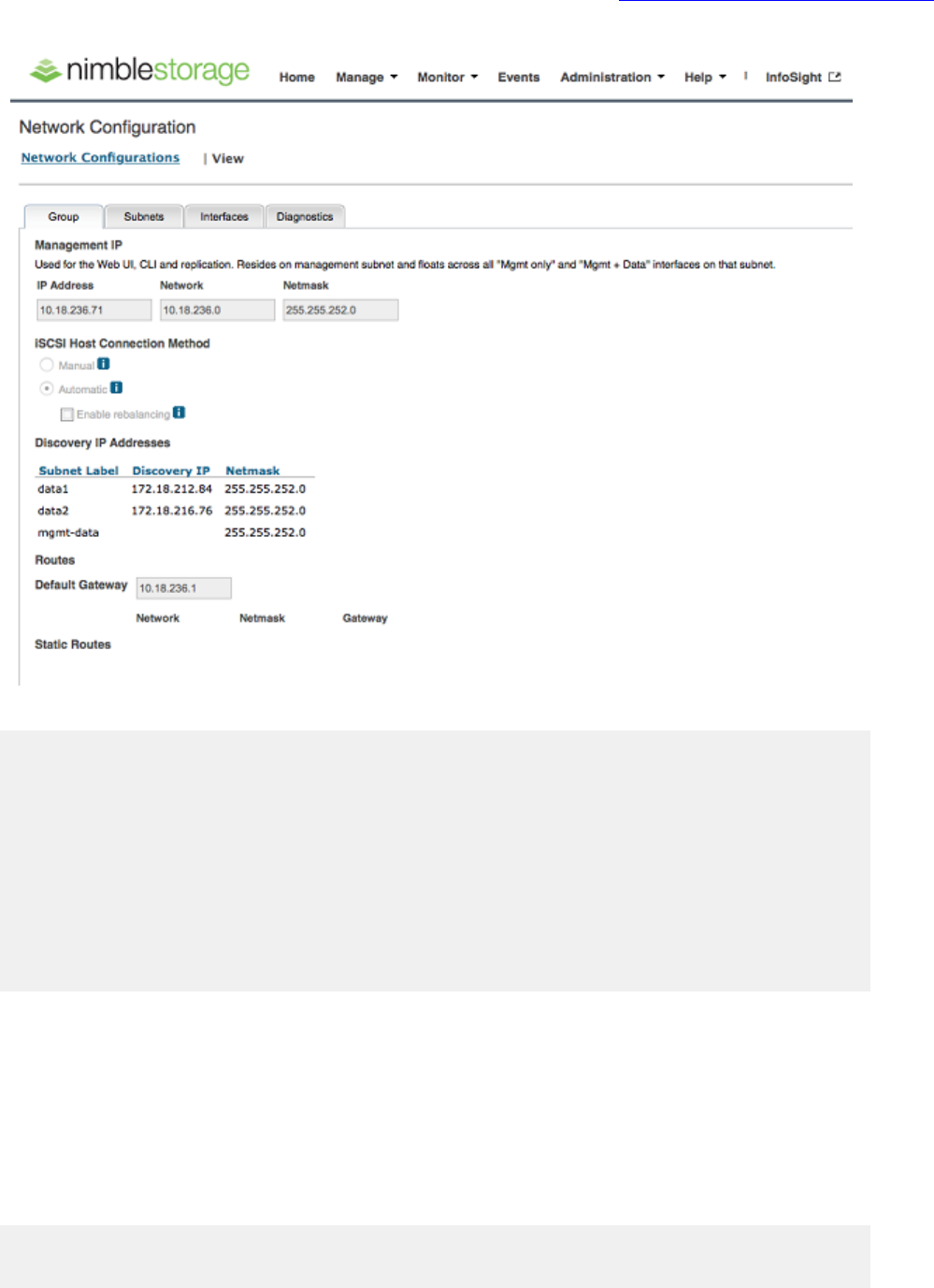

'+DATADG/fcrac1db/datafile/undotbs2.257.955105853',

'+DATADG/fcrac1db/datafile/soe.263.955389549',

'+DATADG/fcrac1db/datafile/undotbs3.264.956339675'

CHARACTER SET WE8MSWIN1252
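A convenient starting point for an --override-pfile file is the pfile captured in the snapshot itself. The Bash sketch below saves the pfile contents printed by --get-pfile to a file and looks up individual instance-wide (*.) parameters. save_pfile and pfile_param are hypothetical helpers that assume the output layout shown above (a Pfile contents: header followed by the parameters); whether an override file accepts exactly this name=value syntax is an assumption to check before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around "noradatamgr --get-pfile".

# save_pfile SID SNAPSHOT OUTFILE: write the parameters that follow the
# "Pfile contents:" header to OUTFILE, skipping blank lines. Fails if
# noradatamgr fails or nothing was captured.
save_pfile() {
    local sid="$1" snap="$2" out="$3"
    noradatamgr --get-pfile --instance "$sid" --snapname "$snap" |
        awk 'found && NF; /^Pfile contents:/ { found = 1 }' >"$out"
    [ "${PIPESTATUS[0]}" -eq 0 ] && [ -s "$out" ]
}

# pfile_param FILE NAME: print the value of the instance-wide entry *.NAME.
pfile_param() {
    awk -F= -v p="*.$2" '$1 == p { print substr($0, index($0, "=") + 1) }' "$1"
}
```

For example, save_pfile rh68db rh68db.snap.repl /tmp/rh68db.pfile followed by pfile_param /tmp/rh68db.pfile processes would print 150 for the pfile shown above.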

Clone an Instance

You can use the clone workflow to clone a local live database instance, or to clone an instance from a snapshot of the database instance that has already been taken.

An instance cloned from a snapshot of a RAC instance is always a standalone instance. If required, the DBA must manually create a cluster from the cloned instance.

When you clone a local live instance, a new snapshot of the instance is taken. This snapshot has the default prefix "BaseFor" in its name. The prefix is configurable in /opt/NimbleStorage/etc/noradatamgr.conf; changes to noradatamgr.conf require an NLT service restart to take effect.

To clone from an existing snapshot, run the list-snapshots workflow to find the snapshot name, and then pass that name to the clone workflow. The instance to be cloned can be local or remote.

If the source instance runs on a remote host, its volumes must reside on the same group that the local host (the host from which you invoke the CLI) is configured to use. The local host must also appear in the remote instance's list of allowed hosts to be able to clone the instance.


You can also clone only the diskgroups of an instance (without creating a cloned instance containing the

cloned diskgroups). This is useful when you want to restore just a single file from a particular snapshot.

Procedure

Clone an instance.

noradatamgr --clone --instance instance_name --clone-name clone_name [--snapname snapshot_name] [--clone-sid clone_sid_name] [--clone-home ORACLE_HOME] [--inherit-pfile] [--override-pfile absolute_path_to_pfile] [--folder folder_name] [--diskgroups-only]

• --clone-home - The clone workflow uses this value as the ORACLE_HOME of the cloned instance. If you do not specify --clone-home and a local instance is being cloned, the cloned instance's ORACLE_HOME is set to that of the source instance. If you do not specify --clone-home and a remote instance is being cloned, the cloned instance's ORACLE_HOME is set to the value of the $ORACLE_HOME environment variable.

• --inherit-pfile - The cloned instance inherits all the pfile parameters of the source instance captured in the snapshot, except the basic parameters needed to bring the cloned instance up. Do not use this option if the source instance pfile contains an incompatible parameter that would prevent the cloned instance from starting.

• --override-pfile - Overrides the pfile of the cloned instance. If you do not specify --override-pfile, a pfile is generated for the cloned instance. If you specify --override-pfile with an incorrect pfile value, cloning fails.
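Because --override-pfile must be given an absolute path and an incorrect pfile makes the clone fail, it can help to validate the path before starting a clone. The Bash sketch below does that and then runs the clone with the documented options. clone_from_snapshot is a hypothetical helper; it checks only that the path is absolute and readable, not that the file's contents are valid.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: clone_from_snapshot SID CLONE_NAME SNAPSHOT [PFILE]
# Validates the optional override pfile path, then runs the clone.
clone_from_snapshot() {
    local sid="$1" clone="$2" snap="$3" pfile="${4:-}"
    local args=(--clone --instance "$sid" --clone-name "$clone" --snapname "$snap")
    if [ -n "$pfile" ]; then
        case "$pfile" in
            /*) ;;
            *) echo "override pfile must be an absolute path: $pfile" >&2; return 1 ;;
        esac
        if [ ! -r "$pfile" ]; then
            echo "cannot read override pfile: $pfile" >&2
            return 1
        fi
        args+=(--override-pfile "$pfile")
    fi
    noradatamgr "${args[@]}"
}
```

For example, clone_from_snapshot rh68db clonedDB rh68.snap.603 /home/oracle/clonedDB.pfile (a hypothetical path) clones from the named snapshot with an override pfile.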

Example

Clone a database instance from local host

(Instance rh68db is running on host-rhel1)

[root@host-rhel1 ~]# noradatamgr --clone --instance rh68db --clone-name clonedDB --snapname rh68.snap.603 --clone-home /u01/app/base/product/11.2.0/dbhome_1/

Initiating clone ...

[##################################################] 100%

Success: Cloned instance rh68db to clonedDB.

Diskgroup: CLONEDDBDATADG

Disk: /dev/oracleasm/disks/CLONEDDB0002

Device: dm-8

Volume: clonedDB-DATA2

Serial number: ee11f455cc0e40376c9ce900f4f8b199

Size: 100GB

Disk: /dev/oracleasm/disks/CLONEDDB0001

Device: dm-4

Volume: clonedDB-DATA1

Serial number: 51e1732dbf0abf326c9ce900f4f8b199

Size: 100GB

Diskgroup: CLONEDDBLOGDG

Disk: /dev/oracleasm/disks/CLONEDDB

Device: dm-10

Volume: clonedDB-LOG1

Serial number: 8b999dffa7607ffb6c9ce900f4f8b199

Size: 50GB

Allowed hosts: host-rhel1

[root@host-rhel1 ~]#

[root@host-rhel1 ~]# ps aux|grep pmon

oracle 16983 0.0 0.0 39960408 28976 ? Ss 13:19 0:00 ora_pmon_clonedDB


root 18993 0.0 0.0 103324 868 pts/0 S+ 13:21 0:00 grep pmon

grid 28472 0.0 0.0 1340504 26864 ? Ss Oct20 0:31 asm_pmon_+ASM

oracle 29160 0.0 0.0 39960684 20904 ? Ss Oct20 0:34 ora_pmon_rh68db

[root@host-rhel1 ~]#

[grid@host-rhel1 ~]$ asmcmd lsdg

State    Type    Rebal  Sector  Block       AU  Total_MB  Free_MB  Req_mir_free_MB  Usable_file_MB  Offline_disks  Voting_files  Name
MOUNTED  EXTERN  N         512   4096  1048576    102400    14554                0           14554              0             N  ARCHDG/
MOUNTED  NORMAL  N         512   4096  1048576    204800    89780                0           44890              0             N  CLONEDDBDATADG/
MOUNTED  EXTERN  N         512   4096  1048576     51200    50835                0           50835              0             N  CLONEDDBLOGDG/
MOUNTED  NORMAL  N         512   4096  1048576    204800    89782                0           44891              0             N  DATADG/
MOUNTED  EXTERN  N         512   4096  1048576     10236    10177                0           10177              0             N  OCRDG/
MOUNTED  EXTERN  N         512   4096  1048576     51200    50956                0           50956              0             N  REDODG/

Clone a database instance from a remote host

(Instance rh68db is running on host-rhel1 and is cloned on host-rhel2. Ensure that rh68db on host-rhel1 allows host-rhel2 to list snapshots and clone.)

[root@host-rhel2 ~]# noradatamgr --clone --instance rh68db --clone-name db603 --snapname rh68.snap.603 --clone-home /u01/app/base/product/11.2.0/dbhome_1

Initiating clone ...

[##################################################] 100%

Success: Cloned instance rh68db to db603.

Diskgroup: DB603DATADG

Disk: /dev/mapper/mpathy

Device: dm-26

Volume: db603-DATA2

Serial number: 02fcdccbfed7ee536c9ce900f4f8b199

Size: 100GB

Disk: /dev/mapper/mpathz

Device: dm-27

Volume: db603-DATA1

Serial number: 7542d8330bd19cae6c9ce900f4f8b199

Size: 100GB

Diskgroup: DB603LOGDG

Disk: /dev/mapper/mpathaa

Device: dm-28

Volume: db603-LOG1

Serial number: 7fe12c89e12a72926c9ce900f4f8b199

Size: 50GB

Allowed hosts: host-rhel2

[root@host-rhel2 ~]# ps aux|grep pmon

root 5035 0.0 0.0 112644 956 pts/0 S+ 18:09 0:00 grep --color=auto pmon

grid 9908 0.0 0.0 1343280 24088 ? Ss Oct20 0:35 asm_pmon_+ASM

oracle 11735 0.0 0.0 4442960 16880 ? Ss Oct20 0:37 ora_pmon_rh72db


oracle 26029 0.0 0.0 39963196 24896 ? Ss 18:08 0:00 ora_pmon_db603
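The clone summary above pairs each new diskgroup with the HPE Nimble Storage volumes and serial numbers behind it. If you want to keep that mapping, for example to cross-check a later --destroy, a small filter such as the following can extract it. This is a sketch, not part of noradatamgr: the function name clone_volume_map is hypothetical, and it assumes the summary prints the Diskgroup:, Volume:, and Serial number: labels one per line, as in the --clone output above. It also assumes the summary is written to standard output.

```shell
# Sketch: print "<diskgroup> <volume> <serial>" for each volume listed in a
# "noradatamgr --clone" summary read from stdin. clone_volume_map is a
# hypothetical helper; it assumes the "Diskgroup:", "Volume:" and
# "Serial number:" labels appear one per line, as in the output above.
clone_volume_map() {
  awk '
    $1 == "Diskgroup:"                { dg = $2 }               # current diskgroup
    $1 == "Volume:"                   { vol = $2 }              # volume for the next serial
    $1 == "Serial" && $2 == "number:" { print dg, vol, $3 }     # one line per volume
  '
}

# Example (assumes the clone summary goes to stdout):
#   noradatamgr --clone --instance rh68db --clone-name db603 \
#     --snapname rh68.snap.603 --clone-home /u01/app/base/product/11.2.0/dbhome_1 \
#     | tee /tmp/db603-clone.log | clone_volume_map
```

The output of a --diskgroups-only clone, which omits the Diskgroup: label, is not handled by this sketch.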

Clone diskgroups only

ASM diskgroups before cloning:

[grid@host-rhel1 ~]$ asmcmd lsdg

State Type Rebal Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 4096 1048576 102400 14554 0 14554 0 N ARCHDG/

MOUNTED NORMAL N 512 4096 1048576 204800 89782 0 44891 0 N DATADG/

MOUNTED EXTERN N 512 4096 1048576 10236 10177 0 10177 0 N OCRDG/

MOUNTED EXTERN N 512 4096 1048576 51200 50956 0 50956 0 N REDODG/

[grid@host-rhel1 ~]$

Clone only the diskgroups of the specified instance

[root@host-rhel1 ~]# noradatamgr --clone --instance rh68db --clone-name destDB --snapname rh68.snap.603 --diskgroups-only

Initiating clone ...

[##################################################] 100%

Success: Cloning diskgroups of instance rh68db completed.

DESTDBLOGDG

Disk: /dev/oracleasm/disks/DESTDB

Device: dm-11

Volume: destDB-LOG1

Serial number: 90e8b1d1b0e605b76c9ce900f4f8b199

Size: 50GB

DESTDBDATADG

Disk: /dev/oracleasm/disks/DESTDB0002

Device: dm-12

Volume: destDB-DATA1

Serial number: 0dac7bca494ad93d6c9ce900f4f8b199

Size: 100GB

Disk: /dev/oracleasm/disks/DESTDB0001

Device: dm-13

Volume: destDB-DATA2

Serial number: 9add48220356e99c6c9ce900f4f8b199

Size: 100GB

[root@host-rhel1 ~]#

ASM diskgroups after cloning:

[grid@host-rhel1 ~]$ asmcmd lsdg

State Type Rebal Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 4096 1048576 102400 14554 0 14554 0 N ARCHDG/

MOUNTED NORMAL N 512 4096 1048576 204800 89782 0 44891 0 N DATADG/

MOUNTED NORMAL N 512 4096 1048576 204800 89782 0 44891 0 N DESTDBDATADG/

MOUNTED EXTERN N 512 4096 1048576 51200 50956 0 50956 0 N DESTDBLOGDG/

MOUNTED EXTERN N 512 4096 1048576 10236 10177 0 10177 0 N OCRDG/

MOUNTED EXTERN N 512 4096 1048576 51200 50956 0 50956 0 N REDODG/
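Comparing the two lsdg listings by eye works for a few diskgroups; a script can do the same comparison. The following is a minimal sketch, assuming each capture of asmcmd lsdg starts with the column header and has one row per line with the diskgroup name (for example DATADG/) in the last column. The function new_diskgroups and the capture file names are hypothetical, and the example assumes the grid user's login environment is already set up for asmcmd.

```shell
# Sketch: print diskgroups that appear in a later "asmcmd lsdg" capture but
# not in an earlier one, in the order they appear in the later capture.
# Assumes a header line first and one row per line, name in the last column.
new_diskgroups() {
  awk '
    FNR == 1 { next }                              # skip the header row of each file
    NF == 0  { next }                              # ignore blank lines
    FILENAME == ARGV[1] { seen[$NF] = 1; next }    # names present before cloning
    !($NF in seen) { print $NF }                   # names present only after cloning
  ' "$1" "$2"
}

# Example (capture file names are hypothetical):
#   su - grid -c 'asmcmd lsdg' > /tmp/lsdg.before
#   noradatamgr --clone --instance rh68db --clone-name destDB \
#     --snapname rh68.snap.603 --diskgroups-only
#   su - grid -c 'asmcmd lsdg' > /tmp/lsdg.after
#   new_diskgroups /tmp/lsdg.before /tmp/lsdg.after
```

For the before and after listings in this example, the sketch would report DESTDBDATADG/ and DESTDBLOGDG/.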


The 'processes' value was set to 150 for instance rh68db when the snapshot was taken.

[root@host-rhel1 14]# noradatamgr --get-pfile --instance rh68db --snapname rh68db.snap.110

Pfile contents:

rh68db.__db_cache_size=35567697920

rh68db.__java_pool_size=805306368

rh68db.__large_pool_size=268435456

rh68db.__oracle_base='/u01/app/base'#ORACLE_BASE set from environment

rh68db.__pga_aggregate_target=13555990528

rh68db.__sga_target=40667971584

rh68db.__shared_io_pool_size=0

rh68db.__shared_pool_size=3489660928

rh68db.__streams_pool_size=268435456

*.audit_file_dest='/u01/app/base/admin/rh68db/adump'

*.audit_trail='db'

*.compatible='11.2.0.4.0'

*.control_files='+REDODG/rh68db/controlfile/current.256.921347795'

*.db_block_size=8192

*.db_create_file_dest='+DATADG'

*.db_create_online_log_dest_1='+REDODG'

*.db_domain=''

*.db_name='rh68db'

*.diagnostic_dest='/u01/app/base'

*.dispatchers='(PROTOCOL=TCP) (SERVICE=rh68dbXDB)'

*.log_archive_dest_1='LOCATION=+ARCHDG'

*.log_archive_format='%t_%s_%r.dbf'

*.open_cursors=300

*.pga_aggregate_target=13535019008

*.processes=150

*.remote_login_passwordfile='EXCLUSIVE'

*.sga_target=40607154176

*.undo_tablespace='UNDOTBS1'

Create a text file on the host that sets the 'processes' value to 300

[root@host-rhel1 14]# cat /tmp/my-pfile-new-value.txt

*.processes=300

[root@host-rhel1 14]#
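Because a malformed override file is only discovered when the clone runs, it can help to check the file first. The sketch below assumes that --override-pfile expects the same scope.parameter=value lines used in the pfile shown above (for example *.processes=300); that format is inferred from this example rather than from a documented rule. The function check_pfile_overrides is hypothetical, and it skips blank lines and lines that start with #.

```shell
# Sketch: reject an override file that contains anything other than
# "<scope>.<parameter>=<value>" lines (for example "*.processes=300").
# check_pfile_overrides is hypothetical; blank lines and "#" comments are skipped.
check_pfile_overrides() {
  awk '
    /^[ \t]*$/ || /^[ \t]*#/ { next }                    # blank line or comment
    !/^[^.= \t]+\.[A-Za-z_][A-Za-z0-9_]*=./ {            # not scope.parameter=value
      printf "line %d is not scope.parameter=value: %s\n", FNR, $0 | "cat 1>&2"
      bad = 1
    }
    END { exit bad }                                     # 0 if every line matched
  ' "$1"
}

# Example, using the file from this procedure:
#   printf '%s\n' '*.processes=300' > /tmp/my-pfile-new-value.txt
#   check_pfile_overrides /tmp/my-pfile-new-value.txt &&
#     noradatamgr --clone --instance rh68db --clone-name rh68c110 \
#       --snapname rh68db.snap.110 --override-pfile /tmp/my-pfile-new-value.txt
```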

Clone a new instance from rh68db snapshot and override pfile

[root@host-rhel1 14]# noradatamgr --clone --instance rh68db --clone-name rh68c110 --snapname rh68db.snap.110 --override-pfile /tmp/my-pfile-new-value.txt

Initiating clone ...

[##################################################] 100%

Success: Cloned instance rh68db to rh68c110.

Diskgroup: RH68C110LOGDG

Disk: /dev/oracleasm/disks/RH68C110

Device: dm-35

Volume: rh68c110-LOG1

Serial number: 109895d6f7fd35486c9ce900f4f8b199

Size: 50GB

Diskgroup: RH68C110DATADG

Disk: /dev/oracleasm/disks/RH68C1100002

Device: dm-34

Volume: rh68c110-DATA2

Serial number: 0b91b7feee77e1856c9ce900f4f8b199

Size: 100GB

Disk: /dev/oracleasm/disks/RH68C1100001


Device: dm-33

Volume: rh68c110-DATA1

Serial number: 6e9f5eef661518d36c9ce900f4f8b199

Size: 100GB

Allowed hosts: host-rhel1

Verify the 'processes' value in the cloned instance

[root@host-rhel1 14]# su - oracle

[oracle@hiqa-sys-rhel1 ~]$ . oraenv

ORACLE_SID = [rh68db] ? rh68c110

The Oracle base has been set to /u01/app/base

[oracle@hiqa-sys-rhel1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Tue Nov 15 14:41:16 2016

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, Automatic Storage Management, OLAP, Data Mining and Real Application Testing options

SQL> show parameter processes

NAME TYPE VALUE

--------------------------------- --------- ------------------------------

aq_tm_processes integer 1

db_writer_processes integer 3

gcs_server_processes integer 0

global_txn_processes integer 1

job_queue_processes integer 1000

log_archive_max_processes integer 4

processes integer 300

SQL>
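The same check can be scripted instead of run interactively. The sketch below filters SQL*Plus show parameter output down to one value. The helper param_value is hypothetical, and it assumes the NAME TYPE VALUE layout shown above with single-word TYPE and VALUE columns; parameters whose type is reported as "big integer" would need a different field.

```shell
# Sketch: print the VALUE column for one parameter from SQL*Plus
# "show parameter" output on stdin; exits non-zero if the name is absent.
# Assumes single-word NAME, TYPE and VALUE columns, as in the output above.
param_value() {
  awk -v name="$1" '$1 == name { print $3; found = 1 } END { exit !found }'
}

# Example, after ". oraenv" has set ORACLE_SID to rh68c110:
#   printf 'show parameter processes\nexit\n' | sqlplus -s / as sysdba | param_value processes
```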

Delete a Snapshot of an Instance

Use this command to delete a snapshot of an instance. If the snapshot was created with the --replica option of the snapshot CLI, you can also delete its replicated snapshot, if one exists, by adding the --delete-replica option.

Procedure

Delete a snapshot of an instance.

noradatamgr --delete-snapshot --instance instance_name --snapname snapshot_name [--delete-replica]

Example

List the Snapshots of a Given Instance

[root]# noradatamgr --list-snapshot --instance rh68db

-----------------------------------+--------------+--------+-------------------

Snap Name Taken at Instance Usable for cloning

-----------------------------------+--------------+--------+-------------------

rh68.snap.603 16-10-24 12:01 rh68db Yes


rh68db.snap.601 16-10-21 10:36 rh68db Yes

rh68db.snap.600 16-10-20 14:48 rh68db Yes

rh68db.snap.602 16-10-21 15:21 rh68db Yes

Delete a Specified Snapshot

[root]# noradatamgr --delete-snapshot --instance rh68db --snapname rh68db.snap.602

Success: Snapshot rh68db.snap.602 deleted.

Verify the Specified Snapshot is Removed

[root]# noradatamgr --list-snapshot --instance rh68db

-----------------------------------+--------------+--------+-------------------

Snap Name Taken at Instance Usable for cloning

-----------------------------------+--------------+--------+-------------------

rh68.snap.603 16-10-24 12:01 rh68db Yes

rh68db.snap.601 16-10-21 10:36 rh68db Yes

rh68db.snap.600 16-10-20 14:48 rh68db Yes
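The delete-then-verify steps above can be combined into one helper. This is a sketch that uses only the noradatamgr options shown in this section; the function names snapshot_listed and delete_snapshot_verified are hypothetical, and it assumes the snapshot name is the first column of the --list-snapshot output, as in the listings above.

```shell
# Sketch: delete a snapshot, then confirm it no longer appears in
# "noradatamgr --list-snapshot". Helper names are hypothetical.
snapshot_listed() {
  # stdin: --list-snapshot output; the snapshot name is the first column
  awk -v snap="$1" '$1 == snap { found = 1 } END { exit !found }'
}

delete_snapshot_verified() {
  local instance="$1" snap="$2"
  noradatamgr --delete-snapshot --instance "$instance" --snapname "$snap" || return 1
  if noradatamgr --list-snapshot --instance "$instance" | snapshot_listed "$snap"; then
    echo "Snapshot $snap is still listed for instance $instance" >&2
    return 1
  fi
  return 0
}

# Example: delete_snapshot_verified rh68db rh68db.snap.602
```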

Destroy a Cloned Instance

This workflow destroys a cloned database instance with the specified SID. The database to be destroyed must be based on cloned volumes.

The workflow completes these activities:

1. Deletes the database instance

2. Deletes the diskgroups used by the database instance

3. Attempts to delete the volume collection associated with the volumes that back the disks for the instance

Procedure

Destroy a cloned database instance.

noradatamgr --destroy --instance instance_name

Example

Destroy a cloned database instance.

[root]# noradatamgr --destroy --instance DESTDB

Instance DESTDB deleted.

Do you really want to proceed with destroying the instance/diskgroup?(yes/no)yes

Diskgroup DESTDBDATADG deleted.

Diskgroup DESTDBLOGDG deleted.

Success: Instance DESTDB and its backing Nimble volumes cleaned up successfully.

Destroy an ASM Diskgroup

To destroy a diskgroup, the Oracle instance that uses it must be shut down, and no active processes can be reading from or writing to the diskgroup. You can destroy only diskgroups that are based on cloned HPE Nimble Storage volumes.
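The shutdown requirement can be checked before a destroy is attempted. The following is a minimal sketch, assuming that a running instance always shows an ora_pmon_<SID> process, as in the ps output earlier in this guide. The function instance_is_down is hypothetical, and it cannot detect other processes that may still be reading from or writing to the diskgroup, so treat it as a precondition check only.

```shell
# Sketch: succeed only if no "ora_pmon_<SID>" process appears in the ps
# output on stdin, i.e. the instance looks shut down. Matching the last
# field means a "grep ... pmon" line is never mistaken for the instance.
instance_is_down() {
  awk -v want="ora_pmon_$1" '$NF == want { up = 1 } END { exit (up ? 1 : 0) }'
}

# Example, before destroying the diskgroups of the cloned instance DESTDB:
#   ps -eo args | instance_is_down DESTDB || echo "Shut down DESTDB first" >&2
```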